Microsoft Certified Azure Data Scientist Associate Certification – An In-Depth Exploration

In the ever-evolving landscape of technology, data has emerged as the fulcrum upon which modern enterprises pivot. The Microsoft Certified Azure Data Scientist Associate certification represents a distinguished credential within the sphere of cloud-based machine learning, providing aspirants with the acumen to harness Microsoft Azure's vast capabilities. This certification is not merely an emblem of technical proficiency; it embodies a nuanced understanding of data science principles, machine learning workflows, and the application of cloud resources to solve complex business challenges.

Before delving into the specifics of this certification, it helps to know how it is earned: candidates must pass Exam DP-100, Designing and Implementing a Data Science Solution on Azure. Microsoft also offers a training course of the same name that introduces the fundamental skills needed to navigate Azure's data ecosystem. Taking the course is not mandatory, but it can provide a lucid comprehension of Azure’s environment, which proves invaluable when approaching the associate-level examination.

The Azure Data Scientist Associate credential assesses a candidate's capability to undertake multifaceted tasks. These include setting up and managing an Azure Machine Learning workspace, running and monitoring experiments, training and optimizing machine learning models, and deploying and consuming predictive solutions. The exam does not simply evaluate theoretical knowledge; it emphasizes practical proficiency in implementing scalable and efficient data science solutions within Azure, ensuring that certified professionals can transition seamlessly from concept to execution in real-world scenarios.

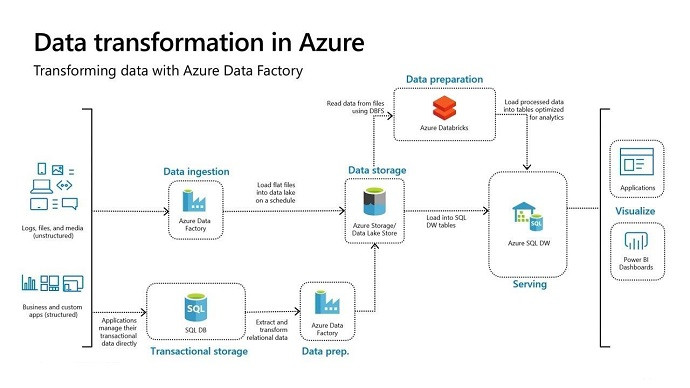

The ubiquity of Microsoft Azure in the enterprise cloud ecosystem is a testament to its versatility and robustness. Organizations worldwide rely on Azure for its comprehensive suite of services that span storage, computation, artificial intelligence, and machine learning. For data scientists, Azure provides an intricate tapestry of tools that facilitate every stage of the data lifecycle—from data ingestion and cleansing to model training and deployment.

Data is often described as the lifeblood of contemporary business. By one widely cited estimate, roughly 2.5 quintillion bytes of data are generated every day, so the capacity to synthesize, analyze, and operationalize data is paramount. Professionals who acquire the Azure Data Scientist Associate certification are adept at converting this immense influx of data into actionable insights, which can guide strategic decision-making and optimize operational efficiencies.

The certification is particularly advantageous in a landscape where enterprises increasingly depend on predictive analytics and intelligent automation. By integrating machine learning models into business processes, organizations can anticipate customer needs, streamline operations, and foster innovation. For instance, a retailer leveraging Azure machine learning can implement predictive purchasing models that alert customers about recurring product needs, thereby enhancing engagement and loyalty. Such applications underscore the transformative potential of certified data scientists who can bridge the gap between raw data and strategic business outcomes.

Acquiring the Azure Data Scientist Associate certification opens a plethora of professional avenues. The credential is recognized across industries as a validation of one's ability to deploy data-driven solutions effectively. Common roles pursued by certified professionals include business intelligence analyst, data mining engineer, data architect, and, naturally, data scientist. Each of these positions demands not only technical prowess but also analytical acuity, problem-solving capabilities, and the ability to communicate complex insights in a coherent and impactful manner.

The demand for professionals proficient in Azure machine learning has been steadily ascending, fueled by the increasing reliance on cloud-based analytics platforms. Data scientists are no longer confined to back-end analysis; they are integral to shaping strategic initiatives and influencing organizational decision-making. Their work can range from designing predictive models that forecast market trends to constructing sophisticated data products that support enterprise-wide applications. The interplay of computer science, mathematics, and statistical reasoning becomes the cornerstone of their daily endeavors, enabling them to translate complex data into practical solutions.

Organizations leveraging Azure machine learning encompass a diverse array of industries. Companies such as 365mc, Aggreko, and Apollo exemplify entities that integrate cloud-based data science into their operations. These enterprises illustrate how certified data scientists can contribute to various domains, from healthcare and energy to finance and logistics. The certification not only equips professionals with technical competencies but also instills the confidence to tackle interdisciplinary challenges, making them invaluable assets in any data-centric environment.

Within the Azure ecosystem, data scientists perform an intricate symphony of tasks that span data collection, processing, model training, and deployment. Their responsibilities extend beyond merely constructing algorithms; they are tasked with designing experiments that yield meaningful insights, optimizing models to ensure accuracy and efficiency, and deploying solutions that seamlessly integrate with existing business processes.

The certification emphasizes the orchestration of Azure Machine Learning workspaces, which serve as collaborative environments for managing datasets, experiments, and models. Candidates learn to configure these workspaces, establish secure compute targets, and manage data objects efficiently. Mastery of these skills ensures that the data scientist can create scalable solutions capable of handling substantial datasets without compromising performance.
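Workspace connection details are commonly kept in a `config.json` file. The v1 Python SDK's `Workspace.from_config()` reads such a file, which holds `subscription_id`, `resource_group`, and `workspace_name` keys. The standard-library sketch below loads and validates a file of that shape before any SDK call is made. The helper name `load_workspace_config` is hypothetical and is not part of the Azure SDK.

```python
import json
from pathlib import Path
from typing import Dict

# Keys that a workspace config.json is expected to contain
REQUIRED_KEYS = ("subscription_id", "resource_group", "workspace_name")


def load_workspace_config(path: str) -> Dict[str, str]:
    """Read a workspace config file and fail early if any required key is missing or empty.

    Hypothetical helper for illustration only; it never contacts Azure.
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    missing = [key for key in REQUIRED_KEYS if not data.get(key)]
    if missing:
        raise ValueError(f"config is missing required keys: {', '.join(missing)}")
    # Return only the connection settings, ignoring any extra keys
    return {key: data[key] for key in REQUIRED_KEYS}
```

Validating the file up front turns a missing value into a clear local error, rather than a failure deep inside a later SDK call.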

Moreover, Azure data scientists are skilled in running experiments that validate model hypotheses and generate quantifiable metrics. This involves not only executing training pipelines but also employing tools that facilitate automated model optimization, hyperparameter tuning, and model interpretation. The ability to deploy models as services and implement batch inference pipelines further underscores the practical applicability of this certification, bridging theoretical knowledge with tangible business impact.
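Batch inference generally means scoring a large input set in chunks rather than one request at a time. The sketch below shows that mini-batch pattern with the standard library. The function names and the threshold model are hypothetical. In Azure Machine Learning, the equivalent work would typically be handled by a batch inference pipeline step whose entry script scores one mini-batch per call.

```python
from typing import Callable, Iterator, List, Sequence


def mini_batches(items: Sequence, batch_size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def batch_score(items: Sequence, predict: Callable[[Sequence], List], batch_size: int = 2) -> List:
    """Call predict once per mini-batch and concatenate the results in input order."""
    results: List = []
    for batch in mini_batches(items, batch_size):
        results.extend(predict(batch))
    return results


# Hypothetical model: flags values above a fixed threshold
def threshold_model(batch: Sequence[float]) -> List[int]:
    return [int(x > 0.5) for x in batch]


print(batch_score([0.1, 0.7, 0.4, 0.9, 0.6], threshold_model, batch_size=2))  # [0, 1, 0, 1, 1]
```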

In contemporary enterprises, the capacity to make informed, data-driven decisions is a defining competitive advantage. Data scientists certified in Azure play a pivotal role in this paradigm, providing insights that can influence marketing strategies, operational workflows, and product development. The integration of machine learning models into decision-making processes allows organizations to anticipate trends, mitigate risks, and capitalize on emerging opportunities.

The certification ensures that professionals are equipped to handle the voluminous data characteristic of modern business landscapes. Through proficiency in Azure Machine Learning, candidates learn to manage data pipelines, interpret complex datasets, and construct predictive models that are both accurate and efficient. This capability transforms raw information into a strategic asset, empowering organizations to navigate uncertainty with agility and foresight.

Furthermore, the role of the data scientist is not confined to analytical tasks. They are often responsible for developing data products that interface directly with end-users, such as recommendation engines, forecasting dashboards, and intelligent automation tools. By acquiring the Azure Data Scientist Associate certification, professionals demonstrate a holistic understanding of both the technical and practical dimensions of data science, ensuring their solutions are impactful and sustainable.

Earning the Microsoft Certified Azure Data Scientist Associate certification requires more than theoretical knowledge; it demands a sophisticated understanding of the Azure Machine Learning ecosystem. One of the most pivotal competencies assessed in this certification revolves around creating and managing an Azure Machine Learning workspace. This workspace acts as the central hub where data, experiments, and models converge, enabling data scientists to orchestrate machine learning pipelines efficiently.

Candidates are expected to demonstrate the ability to configure workspace settings, ensuring that the environment is optimized for collaborative projects. This involves establishing secure datastores, registering datasets, and maintaining data integrity throughout the lifecycle of a project. Proficiency in managing compute resources is also critical. Data scientists must select appropriate compute instances for different workloads, create compute targets for experiments, and ensure that resources are utilized optimally. These tasks form the foundation of an efficient workflow, allowing professionals to navigate the complexities of data management within Azure.
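Registration associates a dataset or model with a name and a version number, so an experiment can pin exactly the data it used. The in-memory class below is a hypothetical stand-in that illustrates only those versioning semantics. It is not the Azure SDK's registration API, and the storage paths are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class RegisteredAsset:
    name: str
    version: int
    uri: str
    tags: Dict[str, str] = field(default_factory=dict)


class AssetRegistry:
    """Hypothetical in-memory registry: each name maps to an ordered list of versions."""

    def __init__(self) -> None:
        self._assets: Dict[str, List[RegisteredAsset]] = {}

    def register(self, name: str, uri: str, tags: Optional[Dict[str, str]] = None) -> RegisteredAsset:
        # Registering an existing name appends a new version instead of overwriting
        versions = self._assets.setdefault(name, [])
        asset = RegisteredAsset(name, len(versions) + 1, uri, dict(tags or {}))
        versions.append(asset)
        return asset

    def get(self, name: str, version: Optional[int] = None) -> RegisteredAsset:
        versions = self._assets.get(name)
        if not versions:
            raise KeyError(f"no asset registered under {name!r}")
        if version is None:
            return versions[-1]  # latest version
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name!r} has no version {version}")
        return versions[version - 1]


registry = AssetRegistry()
registry.register("sales-data", "datastore/sales/2023-01.csv")
registry.register("sales-data", "datastore/sales/2023-02.csv", tags={"source": "crm"})
print(registry.get("sales-data").version, registry.get("sales-data", 1).uri)
```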

Another major competency assessed in the certification is the capacity to design, execute, and monitor experiments that train machine learning models. This skill requires familiarity with Azure Machine Learning Designer and the Azure Machine Learning SDK. Through these tools, data scientists can create sophisticated training pipelines, ingest data efficiently, and define data flow within designer modules. The integration of custom code modules enhances flexibility, allowing the implementation of tailored algorithms to solve specific business problems.
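In a designer or SDK pipeline, each module's output becomes the next module's input. The sketch below models that data flow with plain Python functions. `run_pipeline` and the three steps are hypothetical examples, not designer modules. A real pipeline would also handle parallel branches, caching, and remote compute.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: List[Step], data: Any) -> Tuple[Any, Dict[str, Any]]:
    """Run steps in order, feeding each output into the next step, and keep every intermediate."""
    intermediates: Dict[str, Any] = {}
    for name, func in steps:
        data = func(data)
        intermediates[name] = data
    return data, intermediates


def clean(rows):
    # Drop rows that contain a missing value
    return [r for r in rows if None not in r]


def featurize(rows):
    # Append a derived feature: the product of the two raw columns
    return [(a, b, a * b) for a, b in rows]


def summarize(rows):
    if not rows:
        return {"count": 0, "mean_product": None}
    return {"count": len(rows), "mean_product": sum(r[2] for r in rows) / len(rows)}


raw = [(1, 2), (3, None), (4, 5)]
result, step_outputs = run_pipeline(
    [("clean", clean), ("featurize", featurize), ("summarize", summarize)], raw
)
print(result)  # {'count': 2, 'mean_product': 11.0}
```

Keeping each step's output makes it easy to inspect where a pipeline went wrong, which mirrors how pipeline runs expose per-step outputs for troubleshooting.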

Experimentation is not merely about running models; it involves meticulous planning and iterative refinement. Candidates learn to configure run settings for scripts, utilize datasets effectively, and generate comprehensive metrics that evaluate model performance. This process enables professionals to troubleshoot errors, assess results, and make informed decisions about the subsequent steps in model development. Automating training pipelines ensures reproducibility and scalability, equipping data scientists to handle extensive projects without sacrificing accuracy or efficiency.
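The sketch below shows run settings and metric logging in miniature. Recording the split fraction and random seed makes the run reproducible, and logged metrics accumulate per name so they can later be compared across runs. This is conceptually similar to logging values on a run object in the Azure Machine Learning SDK. The `ExperimentRun` class, the toy threshold classifier, and the data are all hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Row = Tuple[float, int]  # (feature value, binary label)


@dataclass
class ExperimentRun:
    """Hypothetical record of one run: the settings it used and the metrics it logged."""
    settings: Dict[str, float]
    metrics: Dict[str, List[float]] = field(default_factory=dict)

    def log(self, name: str, value: float) -> None:
        # Keep every value so a metric logged repeatedly (e.g. per epoch) forms a series
        self.metrics.setdefault(name, []).append(value)


def train_test_split(rows: List[Row], test_fraction: float, seed: int) -> Tuple[List[Row], List[Row]]:
    """Shuffle with a fixed seed so the same settings always produce the same split."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(threshold: float, rows: List[Row]) -> float:
    """Share of rows where the rule 'predict 1 if value > threshold' matches the label."""
    return sum(int(x > threshold) == y for x, y in rows) / len(rows)


rows = [(i / 10, int(i >= 5)) for i in range(10)]
run = ExperimentRun(settings={"test_fraction": 0.3, "seed": 42, "threshold": 0.45})
train, test = train_test_split(rows, run.settings["test_fraction"], int(run.settings["seed"]))
run.log("train_accuracy", accuracy(run.settings["threshold"], train))
run.log("test_accuracy", accuracy(run.settings["threshold"], test))
print(run.metrics)
```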

Optimization is a critical aspect of machine learning, and the certification emphasizes the importance of refining models to achieve the best possible performance. Candidates are evaluated on their ability to use Automated Machine Learning interfaces, both through the Azure Machine Learning studio and the SDK. This approach streamlines model creation by automating algorithm selection, data preprocessing, and hyperparameter tuning, allowing data scientists to focus on interpretation and strategic adjustments.
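Automated ML tries many combinations of algorithm and preprocessing, then ranks them by a primary metric. The toy below captures only the ranking idea. It scores three hypothetical candidate regressors by k-fold cross-validated RMSE and keeps the best one. This is an illustration under those assumptions, not a description of how Azure's Automated ML works internally.

```python
import math
from statistics import mean, median
from typing import Callable, Dict, Sequence, Tuple

Fit = Callable[[Sequence[float], Sequence[float]], Callable[[float], float]]


def fit_mean(xs, ys):
    m = mean(ys)
    return lambda x: m  # always predicts the training mean


def fit_median(xs, ys):
    m = median(ys)
    return lambda x: m  # always predicts the training median


def fit_linear(xs, ys):
    # Ordinary least squares for y = a + b * x
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def cv_rmse(fit: Fit, xs, ys, k: int = 3) -> float:
    """Average RMSE over k contiguous folds (assumes len(xs) >= k)."""
    n = len(xs)
    scores = []
    for fold in range(k):
        test_idx = set(range(fold * n // k, (fold + 1) * n // k))
        train_x = [x for i, x in enumerate(xs) if i not in test_idx]
        train_y = [y for i, y in enumerate(ys) if i not in test_idx]
        model = fit(train_x, train_y)
        errors = [(model(xs[i]) - ys[i]) ** 2 for i in sorted(test_idx)]
        scores.append(math.sqrt(mean(errors)))
    return mean(scores)


def select_best(candidates: Dict[str, Fit], xs, ys, k: int = 3) -> Tuple[str, Dict[str, float]]:
    """Score every candidate with the primary metric (RMSE, lower is better) and pick the best."""
    scores = {name: cv_rmse(fit, xs, ys, k) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores


xs = list(range(9))
ys = [2 * x + 1 for x in xs]
best, scores = select_best({"mean": fit_mean, "median": fit_median, "linear": fit_linear}, xs, ys)
print(best, scores)
```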

Hyperparameter tuning with HyperDrive is another key skill. Professionals are expected to define search spaces, select sampling methods, and establish a primary metric to guide the optimization process. They also apply early termination policies that stop poorly performing runs, conserving compute resources. Beyond optimization, interpreting models with explainers provides transparency, enabling stakeholders to understand which features influence predictions. This interpretability is vital for building trust in machine learning solutions, particularly in business contexts where decisions may have significant financial or operational implications.
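A HyperDrive-style sweep combines four elements: a search space, a sampling method, a primary metric, and an early termination policy. The sketch below shows all four with the standard library, under these assumptions:

* Sampling is random.
* Accuracy is the primary metric, and it is maximized.
* A bandit-style rule stops any trial whose metric at a checkpoint falls below best / (1 + slack_factor).

The objective function is a hypothetical stand-in for real training. Trials run sequentially rather than in parallel, and none of the names are Azure SDK APIs.

```python
import math
import random
from typing import Callable, Dict, List

# Hypothetical search space: each hyperparameter name maps to a sampling function
SEARCH_SPACE: Dict[str, Callable[[random.Random], float]] = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),  # log-uniform between 1e-4 and 1e-1
    "batch_size": lambda rng: rng.choice([16, 32, 64]),      # discrete choice
}


def sample(rng: random.Random) -> Dict[str, float]:
    """Random sampling: draw each hyperparameter independently."""
    return {name: draw(rng) for name, draw in SEARCH_SPACE.items()}


def simulated_accuracy(params: Dict[str, float], epoch: int) -> float:
    """Toy objective: accuracy rises with epochs and peaks near learning_rate = 0.01.

    batch_size is sampled but ignored by this toy objective.
    """
    distance = abs(math.log10(params["learning_rate"]) + 2)
    ceiling = 0.95 - 0.15 * distance
    return ceiling * (1 - math.exp(-(epoch + 1) / 3))


def tune(n_runs: int, epochs: int, slack_factor: float, seed: int = 0) -> List[dict]:
    """Run trials one after another, stopping any trial that falls too far behind the best so far."""
    rng = random.Random(seed)
    best_at_epoch: Dict[int, float] = {}  # best primary metric seen at each epoch (maximizing)
    results: List[dict] = []
    for _ in range(n_runs):
        params = sample(rng)
        status = "completed"
        for epoch in range(epochs):
            metric = simulated_accuracy(params, epoch)
            best = best_at_epoch.get(epoch)
            # Bandit-style rule: terminate if metric < best / (1 + slack_factor)
            if best is not None and metric < best / (1 + slack_factor):
                status = "terminated"
                break
            best_at_epoch[epoch] = metric if best is None else max(best, metric)
        results.append({"params": params, "metric": metric, "epochs_run": epoch + 1, "status": status})
    return results


results = tune(n_runs=20, epochs=10, slack_factor=0.1, seed=1)
best_run = max((r for r in results if r["status"] == "completed"), key=lambda r: r["metric"])
print(best_run["params"], round(best_run["metric"], 3))
```

A larger `slack_factor` tolerates more underperformance before stopping a trial. That trades compute savings for a lower risk of discarding a configuration that would have improved later.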

Managing models extends beyond creation and optimization. Candidates must register trained models, monitor their usage, and track data drift to ensure that deployed solutions remain accurate and relevant over time. This continuous oversight is essential in dynamic environments where input data may evolve, necessitating timely recalibration of models. The ability to maintain and manage machine learning solutions within Azure reflects a mature understanding of both technical and operational dimensions of data science.
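One simple way to quantify data drift is the population stability index (PSI). It compares how a feature's values are distributed across bins in baseline data versus recent data. PSI is used here purely as an illustrative statistic; it is not necessarily what Azure's drift monitors compute. The bin edges and data are hypothetical. A common rule of thumb treats values above roughly 0.25 as a significant shift.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values falling in each bin defined by ascending interior edges.

    Empty bins are floored at eps so the logarithm below stays finite.
    """
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1  # bin index = number of edges <= v
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


edges = [0.25, 0.5, 0.75]
baseline = [i / 100 for i in range(100)]
shifted = [min(x + 0.3, 0.99) for x in baseline]
print(population_stability_index(baseline, baseline, edges))  # identical data gives 0.0
print(population_stability_index(baseline, shifted, edges))
```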

The final domain assessed in the certification revolves around the deployment and consumption of machine learning models. Professionals must be adept at creating production-ready compute targets that balance security, performance, and cost-efficiency. Selecting appropriate deployment options and configuring settings for services ensures that models operate seamlessly in real-world scenarios.

Deploying models as services entails a series of tasks, including troubleshooting container issues, configuring endpoints, and ensuring that the deployed solutions integrate smoothly with existing applications. Data scientists also create pipelines for batch inferencing, publishing them to generate outputs efficiently. In addition, designer pipelines can be converted into web services, enabling end-users or other applications to consume predictions in real time. This stage underscores the practical applicability of the certification, emphasizing not only technical proficiency but also the ability to deliver tangible business value through deployed models.
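Azure Machine Learning deployments typically use an entry (scoring) script that defines two functions: `init()`, called once when the service starts, and `run()`, called for each request. The sketch below follows that shape using only the standard library. The JSON layout `{"data": [[...], ...]}` and the hard-coded linear model are assumptions for illustration; a real script would load the registered model file in `init()`.

```python
import json
from typing import List, Optional

model: Optional[dict] = None  # populated once by init()


def init() -> None:
    """Called once at service start; a real script would deserialize the registered model here."""
    global model
    # Hypothetical linear model used in place of a loaded model file
    model = {"weights": [0.4, -0.2], "bias": 0.1}


def run(raw_data: str) -> str:
    """Called per request: parse JSON, score each row, and return predictions or an error message."""
    try:
        rows: List[List[float]] = json.loads(raw_data)["data"]
        predictions = [
            sum(w * x for w, x in zip(model["weights"], row)) + model["bias"]
            for row in rows
        ]
        return json.dumps({"predictions": predictions})
    except (KeyError, TypeError, ValueError) as exc:  # malformed input (or init() not called)
        return json.dumps({"error": str(exc)})
```

Returning a structured error, rather than raising, keeps the service responsive when a client sends malformed input. Container logs remain the place to diagnose deeper failures.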

The competencies evaluated in the certification are not confined to hypothetical scenarios; they translate directly into impactful applications across industries. For instance, a healthcare provider might deploy predictive models to anticipate patient admissions, optimize resource allocation, and enhance care quality. In the finance sector, predictive algorithms can assess credit risk, detect anomalies, and guide investment strategies. Manufacturing firms utilize machine learning pipelines to forecast demand, optimize supply chains, and reduce operational inefficiencies. These applications demonstrate how mastery of Azure Machine Learning workspaces, experimentation, model optimization, and deployment translates into actionable insights and measurable business outcomes.

The ability to manage end-to-end machine learning workflows within Azure ensures that certified data scientists can bridge the gap between raw data and actionable intelligence. This comprehensive skill set enhances employability, allowing professionals to contribute meaningfully to organizations seeking to leverage cloud-based machine learning solutions. Beyond technical skills, these competencies cultivate a mindset of continuous improvement, adaptability, and analytical rigor, all of which are essential for long-term success in the data science domain.

The certification emphasizes a balance between theoretical understanding and practical application. Knowledge of algorithms, statistical principles, and machine learning theory forms the intellectual bedrock upon which practical skills are built. However, the true measure of proficiency lies in the ability to implement these principles effectively within Azure’s ecosystem. From configuring compute targets to automating training pipelines, each task requires a synthesis of conceptual insight and technical dexterity.

Practical exercises, such as creating experiments and deploying models, reinforce learning by translating abstract concepts into concrete actions. This approach ensures that candidates are not only familiar with Azure’s tools but are also capable of applying them to solve nuanced business problems. The integration of hands-on practice with theoretical comprehension cultivates a holistic skill set, preparing professionals to tackle challenges that extend beyond the confines of the certification exam.

Mastery of the skills assessed in the certification also involves strategic thinking. Data scientists must determine the most effective methods for data preprocessing, model selection, and experiment design. They must anticipate potential pitfalls, optimize resource utilization, and interpret outputs in a manner that informs decision-making. This strategic lens differentiates proficient practitioners from those who simply execute tasks mechanically, highlighting the certification’s emphasis on analytical sophistication and operational foresight.

Moreover, understanding the nuances of Azure Machine Learning’s capabilities allows professionals to innovate within the platform. They can leverage automated tools for efficiency, customize workflows to address unique business needs, and ensure that models remain accurate and reliable over time. Such expertise underscores the value of the certification as a marker of advanced competence in the intersection of cloud computing, machine learning, and applied data science.

The skills honed through preparation for the Microsoft Certified Azure Data Scientist Associate certification carry enduring professional value. Certified professionals possess the capacity to navigate complex data environments, implement robust machine learning solutions, and translate analytical insights into strategic initiatives. These abilities are increasingly sought after across sectors, from healthcare and finance to manufacturing and technology, reflecting the ubiquitous relevance of data-driven decision-making.

By cultivating expertise in Azure Machine Learning workspaces, experiment execution, model optimization, and deployment, certified data scientists contribute to organizational agility, efficiency, and innovation. They become agents of transformation, capable of leveraging data to anticipate trends, mitigate risks, and unlock new opportunities. The certification thus represents not only a milestone in professional development but also a gateway to impactful and influential roles in the contemporary data ecosystem.

Embarking on the journey toward the Microsoft Certified Azure Data Scientist Associate certification requires a structured approach to learning, one that blends conceptual understanding with practical application. Microsoft has crafted a deliberate learning path designed to equip professionals with the competencies needed to excel in the dynamic realm of machine learning within Azure. This pathway encompasses a series of interconnected courses that gradually build expertise, beginning with foundational concepts and culminating in advanced application techniques.

The learning path is accessible to individuals with varying degrees of familiarity with Azure. For those new to the ecosystem, the initial modules provide an introduction to core services, illustrating how Azure facilitates data ingestion, processing, and experimentation. These introductory courses often cover essentials such as workspace creation, dataset management, and compute configuration. They offer a lucid framework for understanding how machine learning operations are structured and executed in a cloud environment.

Intermediate and advanced modules delve deeper into specialized competencies, including the orchestration of complex pipelines, model optimization using automated tools, and the deployment of models as services. The learning path emphasizes hands-on exercises, allowing aspirants to experiment with real datasets, construct pipelines, and interpret results within an authentic Azure environment. This practical focus ensures that learners can translate theoretical knowledge into tangible capabilities, preparing them for both the certification examination and real-world professional scenarios.

Microsoft provides multiple modes of learning, including self-paced online courses and instructor-led training. Free modules typically introduce fundamental concepts and workflows, offering learners an accessible entry point without financial commitment. Paid courses, on the other hand, provide comprehensive coverage of all exam objectives, often accompanied by extensive hands-on labs, practical exercises, and evaluation tools to reinforce understanding. By navigating this layered approach, candidates can progressively consolidate their skills while ensuring alignment with the certification requirements.

Effective preparation for the Azure Data Scientist Associate certification relies on the combination of guided instruction and self-directed exploration. Structured guidance ensures that learners encounter concepts in a logical sequence, gradually building complexity while reinforcing core competencies. Self-paced learning, meanwhile, allows individuals to explore areas of personal interest or weakness in greater depth, fostering a sense of autonomy and reinforcing engagement with the material.

Interactive exercises and laboratory sessions are integral to this learning path. They simulate real-world scenarios, encouraging learners to manipulate datasets, configure experiments, and deploy models in a controlled environment. These experiences cultivate familiarity with Azure Machine Learning services, instilling confidence in navigating the workspace, selecting appropriate compute resources, and executing experiments efficiently. Over time, these hands-on exercises develop a nuanced understanding of the platform, enabling aspirants to anticipate potential challenges and apply strategic solutions.

The learning path also encourages iterative exploration. Candidates are prompted to revisit modules, refine workflows, and analyze the outcomes of various experiment configurations. This iterative approach mirrors the cyclical nature of real-world data science, where repeated experimentation and optimization are crucial to deriving accurate and reliable insights. By embracing this methodology, learners cultivate analytical dexterity and a pragmatic understanding of model development within Azure.

While the Microsoft learning path offers a structured roadmap, supplementary courses from external providers can enhance preparation and provide alternative perspectives. It is imperative to select courses that comprehensively cover the skills assessed in the certification. High-quality offerings typically include detailed instruction on workspace management, experiment execution, model optimization, and deployment, ensuring that candidates are exposed to all domains evaluated in the exam.

Courses that integrate extensive hands-on labs are particularly valuable. They allow learners to apply theoretical concepts in practical scenarios, reinforcing understanding and building confidence in executing tasks independently. Additionally, access to practice exams provides insight into the structure and difficulty of certification questions, helping candidates develop effective time management strategies and exam familiarity. The combination of guided instruction, practical exercises, and evaluative tools cultivates a holistic understanding, aligning closely with the objectives of the certification.

These programs often offer end-to-end coverage, from the fundamentals of Azure Machine Learning to advanced experimentation and deployment techniques. By engaging with such courses, learners gain exposure to a breadth of scenarios and problem-solving approaches, equipping them to tackle both straightforward and nuanced challenges encountered during certification and professional practice.

One of the distinguishing characteristics of the learning path is its emphasis on bridging theoretical knowledge with applied experience. Understanding the underlying principles of machine learning, statistical analysis, and model evaluation is crucial, yet proficiency in a real-world context requires more than conceptual insight. The learning path and recommended courses collectively emphasize the application of these principles within the Azure ecosystem, enabling learners to construct, optimize, and deploy models effectively.

Practical exercises often include the creation of pipelines for training and batch inferencing, experimentation with automated model optimization, and the deployment of models as web services. Learners also engage with model interpretability tools, gaining the ability to explain predictions and assess feature importance. This applied dimension ensures that candidates can translate abstract knowledge into functional solutions, a competency that is central to both certification success and professional achievement.
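Permutation feature importance is one model-agnostic way to estimate how much a model relies on each feature. The method shuffles one feature column, re-scores the model, and measures how much the error grows. The sketch below assumes a regression model scored by mean squared error, and the model and data are hypothetical. Azure's interpretability tooling provides its own explainers rather than this function.

```python
import random
from statistics import mean
from typing import Callable, List, Sequence

Predict = Callable[[List[float]], float]


def mse(predict: Predict, X: Sequence[List[float]], y: Sequence[float]) -> float:
    return mean((predict(row) - target) ** 2 for row, target in zip(X, y))


def permutation_importance(predict: Predict, X: Sequence[List[float]], y: Sequence[float],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Mean increase in MSE when each feature column is shuffled; larger means more important."""
    rng = random.Random(seed)
    baseline = mse(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only column j replaced by its shuffled value
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mse(predict, X_perm, y) - baseline)
        importances.append(mean(increases))
    return importances


# Hypothetical model that uses feature 0 and ignores feature 1
def toy_model(row: List[float]) -> float:
    return 3.0 * row[0]


X = [[float(i), float((i * 7) % 5)] for i in range(20)]
y = [3.0 * i for i in range(20)]
print(permutation_importance(toy_model, X, y))
```

A feature the model ignores scores zero, because shuffling it cannot change any prediction. That makes the method a quick sanity check on what a trained model actually uses.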

Moreover, exposure to diverse datasets and problem contexts fosters adaptability. By encountering scenarios ranging from predictive maintenance to customer behavior modeling, learners develop the capacity to generalize their skills across domains. This versatility is invaluable in a professional environment where data science tasks vary widely and require the synthesis of multiple technical and analytical competencies.

Consistent and structured practice is indispensable when preparing for the certification. Candidates are encouraged to simulate real-world workflows, iteratively refining models, troubleshooting errors, and evaluating performance metrics. This disciplined approach builds confidence and resilience, enabling learners to navigate complex machine learning processes with precision and agility.

In addition to technical practice, engaging with community forums, study groups, and discussion platforms can reinforce learning. Sharing insights, exchanging strategies, and analyzing problem-solving approaches provides exposure to diverse perspectives and enhances critical thinking. Such collaborative learning enriches the preparation experience, fostering a deeper comprehension of Azure Machine Learning concepts and their practical application.

To maximize the efficacy of the learning path and courses, candidates should adopt a multifaceted approach. Establishing a structured study schedule ensures consistent engagement and progressive skill acquisition. Beginning with foundational modules before advancing to complex experimentation and deployment workflows allows learners to scaffold their knowledge systematically.

Integration of hands-on labs with theoretical review is crucial. Practicing experiment configuration, model training, and deployment reinforces the conceptual underpinnings of machine learning while developing operational fluency. Additionally, periodic self-assessment through practice tests enables candidates to identify areas requiring further focus, calibrate their understanding, and enhance performance under exam conditions.

Learners should also cultivate adaptability and analytical discernment. Encountering unforeseen errors, dataset inconsistencies, or performance anomalies is inherent to data science workflows. The ability to diagnose, interpret, and rectify these challenges is a hallmark of proficiency, reflecting not only technical competence but also critical thinking and problem-solving acumen.

Engaging with the learning path and recommended courses confers enduring professional advantages beyond the certification itself. Certified professionals develop a comprehensive mastery of Azure Machine Learning tools, experiment management, model optimization, and deployment practices. This proficiency equips them to navigate the rapidly evolving data landscape, delivering impactful insights and solutions across diverse industries.

Organizations increasingly seek professionals who can translate complex data into actionable intelligence, automate workflows, and implement scalable machine learning models. The competencies acquired through the learning path enable certified data scientists to fulfill these expectations, positioning them as integral contributors to strategic initiatives and innovation.

Furthermore, the iterative learning methodology fosters a mindset of continuous improvement. Professionals who engage deeply with both theoretical and practical dimensions of machine learning are better prepared to adapt to emerging technologies, refine workflows, and maintain model efficacy over time. This adaptability ensures sustained relevance and competitiveness in a field characterized by rapid technological advancement.

Effective Preparation for Azure Data Scientist Associate Certification

Preparation for the Microsoft Certified Azure Data Scientist Associate certification demands a harmonious blend of theoretical comprehension, practical application, and strategic planning. Achieving success in the examination is not merely a function of memorizing workflows or concepts; it requires a profound understanding of the Azure Machine Learning ecosystem, its tools, and how machine learning principles manifest in real-world scenarios.

For professionals new to Azure, the initial focus should be on familiarizing themselves with core services such as workspace configuration, dataset management, and compute resources. This foundational knowledge forms the bedrock upon which more advanced competencies can be built. Engaging with the optional Designing and Implementing a Data Science Solution on Azure course can provide a lucid introduction to these concepts, offering an accessible pathway to understanding the interplay between data ingestion, model training, and deployment within a cloud environment.

Those with prior experience in Azure should focus on refining existing skills and exploring advanced functionalities. This includes designing complex machine learning pipelines, optimizing models using automated techniques, and deploying models in production environments. Practical experience is invaluable, as the certification assesses not only conceptual understanding but also the ability to implement solutions efficiently and accurately. Hands-on experimentation with datasets, pipelines, and deployment scenarios develops both confidence and technical dexterity, enabling candidates to navigate unexpected challenges during the exam.

A methodical approach to preparation can enhance retention and performance. Candidates are encouraged to develop a structured study schedule that balances time between learning concepts, performing hands-on exercises, and self-assessment. Beginning with fundamental principles and progressively advancing to more intricate topics allows learners to scaffold their knowledge, ensuring a robust understanding of machine learning workflows within Azure.

Hands-on labs play a pivotal role in reinforcing theoretical knowledge. By constructing and running experiments, configuring compute targets, and deploying models, learners acquire a first-hand understanding of Azure’s capabilities. This experiential learning mirrors real-world scenarios, cultivating problem-solving acumen and operational competence. Additionally, exploring model interpretability, feature importance, and optimization techniques ensures that candidates can apply insights effectively, a critical component of both the examination and professional practice.

Incorporating iterative practice is essential. Revisiting modules, refining experiment configurations, and analyzing results multiple times helps reinforce knowledge while developing analytical agility. This cyclical approach aligns with the dynamic nature of data science, where repeated experimentation and refinement are necessary to achieve accurate, reliable, and efficient models.

Practice tests constitute an invaluable resource for candidates preparing for the certification. They offer a realistic preview of the exam structure, including multiple-choice questions, drag-and-drop exercises, case studies, and scenarios requiring multiple responses. Engaging with practice tests allows learners to calibrate their time management, assess comprehension of key topics, and identify areas that require additional focus.

Evaluation tools, including quizzes and interactive exercises, further reinforce learning by providing immediate feedback. By analyzing incorrect responses, candidates can discern gaps in understanding, refine strategies, and approach subsequent exercises with enhanced insight. This iterative evaluation not only improves technical proficiency but also cultivates confidence, ensuring that learners are well-prepared for the examination environment.

Success on the certification exam extends beyond technical execution; it also requires analytical judgment and strategic thinking. Candidates must determine the most suitable methods for data preprocessing, model selection, and experiment orchestration. Anticipating potential pitfalls, interpreting outputs accurately, and adjusting workflows accordingly are critical skills that distinguish proficient practitioners.

The certification emphasizes the ability to implement end-to-end machine learning solutions within Azure. From configuring workspaces and managing datasets to optimizing models and deploying services, candidates must navigate a complex sequence of tasks efficiently. Strategic insight allows professionals to approach these workflows with foresight, ensuring resource efficiency, model accuracy, and operational reliability. Developing this level of analytical acumen requires deliberate practice, critical reflection, and engagement with diverse problem scenarios.

The certification examination lasts approximately 120 minutes and uses a range of question formats designed to evaluate both conceptual knowledge and practical proficiency: multiple-choice questions, case studies, drag-and-drop exercises, and scenarios requiring multiple responses. This varied format tests the ability to apply machine learning principles within Azure, assess outcomes, and make informed decisions under time constraints.

The examination includes between forty and sixty questions, and the passing score is 700 on a scale of 1 to 1,000. It is offered in multiple languages, including English, Japanese, Simplified Chinese, Korean, German, Traditional Chinese, French, Spanish, Portuguese (Brazil), Russian, Arabic (Saudi Arabia), Italian, and Indonesian, reflecting its global reach. The examination fee is approximately US$165, with pricing varying by country, and the certification remains valid for twelve months, which makes timely renewal and continued professional development important.

While prior certification is not required, familiarity with data science principles and practical experience using Azure Machine Learning significantly enhances readiness for the exam. Candidates benefit from experience in managing workspaces, configuring compute resources, designing experiments, and deploying models. Exposure to tools such as MLflow and Azure Machine Learning Designer cultivates a deeper understanding of workflows and ensures that aspirants can implement solutions efficiently in real-world contexts.

Practical experience also facilitates problem-solving under uncertainty, a critical aspect of both the exam and professional practice. Candidates who have engaged with diverse datasets and experimented with various pipelines develop an intuitive grasp of how to optimize models, interpret results, and troubleshoot challenges. This experiential knowledge provides a competitive advantage, enabling candidates to approach the examination with confidence and composure.

The interplay between theoretical knowledge and applied experience is central to preparation for the certification. Understanding algorithms, statistical models, and evaluation metrics provides the conceptual foundation, while hands-on experimentation reinforces comprehension and builds operational fluency. Candidates who integrate these dimensions develop a holistic understanding of machine learning workflows within Azure, ensuring that their solutions are both technically sound and practically applicable.
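
Evaluation metrics are easiest to internalize by computing them by hand at least once. The sketch below is a plain-Python illustration of the standard definitions of accuracy, precision, recall, and F1 for a binary classifier. The function name `classification_metrics` and the label lists are hypothetical illustration data, not part of any Azure API; Azure Machine Learning's automated ML reports metrics of this kind for you, so the sketch is meant for understanding rather than as a replacement.

```python
from typing import Sequence, Tuple


def classification_metrics(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float, float, float]:
    """Return (accuracy, precision, recall, F1) for binary predictions.

    Hypothetical helper for illustration; it implements the textbook definitions.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard each ratio against division by zero when a class never appears.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


# Made-up labels for illustration only.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
acc, prec, rec, f1 = classification_metrics(y_true, y_pred)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
```

In this made-up example precision (3 of 5 positive predictions correct) is lower than recall (3 of 4 actual positives found), because the model raises two false alarms but misses only one positive. Which metric to prioritize depends on the relative cost of each kind of error.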

Structured practice in managing workspaces, running experiments, optimizing models, and deploying services ties theory and practice together. Candidates learn to anticipate workflow challenges, configure pipelines efficiently, and interpret outputs accurately. This combination cultivates a versatile skill set, equipping candidates to address complex data science challenges both in the exam and in professional environments.

Effective time management is an essential component of exam preparation. Candidates should allocate sufficient time to review each domain, engage with hands-on exercises, and complete practice tests under timed conditions. Developing strategies for prioritizing questions, managing complex scenarios, and allocating attention efficiently enhances performance during the examination.
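
As a rough pacing aid, the sketch below divides the approximately 120 minutes described earlier across the forty-to-sixty-question range. It assumes the whole duration is available for answering questions; the function name `minutes_per_question` and the 15-minute review reserve are hypothetical choices for illustration, not exam rules.

```python
def minutes_per_question(
    total_minutes: float, num_questions: int, review_minutes: float = 0.0
) -> float:
    """Average minutes available per question after reserving time for a final review pass."""
    if num_questions <= 0:
        raise ValueError("num_questions must be positive")
    if not 0 <= review_minutes < total_minutes:
        raise ValueError("review_minutes must be at least 0 and less than total_minutes")
    return (total_minutes - review_minutes) / num_questions


# Figures from the exam description: about 120 minutes, forty to sixty questions.
EXAM_MINUTES = 120
REVIEW_RESERVE = 15  # hypothetical buffer for revisiting flagged questions

for n in (40, 60):
    plain = minutes_per_question(EXAM_MINUTES, n)
    buffered = minutes_per_question(EXAM_MINUTES, n, REVIEW_RESERVE)
    print(f"{n} questions: {plain:.2f} min each, "
          f"or {buffered:.2f} min with a {REVIEW_RESERVE}-minute review reserve")
```

Case studies tend to take longer than single multiple-choice items, so the per-question figure works best as an average checkpoint during practice tests rather than a hard limit on any one question.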

Exam readiness also involves cultivating resilience and confidence. Engaging with practice scenarios, reflecting on problem-solving approaches, and iteratively refining workflows helps candidates approach the exam with a composed and analytical mindset. Familiarity with the Azure interface, workflow configuration, and model deployment reduces uncertainty, allowing professionals to focus on applying their knowledge rather than navigating unfamiliar tools.

The preparation strategies employed for the certification yield benefits that extend beyond exam success. Professionals who immerse themselves in the Azure Machine Learning ecosystem acquire enduring competencies in workspace management, experimentation, model optimization, and deployment. These skills are directly applicable in professional roles, enabling certified data scientists to contribute strategically to organizational goals, implement scalable solutions, and support data-driven decision-making.

By internalizing both theoretical principles and practical workflows, certified professionals are well-equipped to navigate evolving technological landscapes. Their expertise positions them as valuable contributors across sectors such as healthcare, finance, manufacturing, and technology, where data-driven insights are central to operational efficiency and innovation. The certification thus serves as both a milestone in professional development and a catalyst for sustained career growth.

The Microsoft Certified Azure Data Scientist Associate certification represents a distinguished milestone for professionals seeking to master machine learning within the Microsoft Azure ecosystem. It encompasses a spectrum of competencies, from establishing and managing Azure Machine Learning workspaces to conducting experiments, optimizing models, and deploying solutions that deliver tangible business impact. The certification emphasizes a seamless integration of theoretical understanding and practical application, ensuring that certified professionals can translate complex datasets into actionable insights and strategic decisions. Through a structured learning path, aspirants gain exposure to foundational concepts, advanced experimentation, and deployment workflows, supplemented by hands-on exercises and practical labs that reinforce technical proficiency. Preparation strategies involve iterative practice, engagement with realistic datasets, and self-assessment, cultivating analytical dexterity, problem-solving acumen, and operational competence. The knowledge acquired extends beyond the examination itself, equipping professionals to implement scalable machine learning solutions, interpret model outcomes, and manage data pipelines in dynamic enterprise environments. Mastery of these skills positions certified data scientists as invaluable contributors across industries, capable of driving innovation, enhancing operational efficiency, and enabling data-driven decision-making. Ultimately, the certification not only validates technical expertise but also fosters strategic insight, adaptability, and professional growth, empowering individuals to navigate the evolving landscape of cloud-based analytics and make a lasting impact in the field of data science.
