DP-203: Data Engineering on Microsoft Azure

PDFs and exam guides are not always the most efficient way to study, right? Prepare for your Microsoft exam with our training course. The DP-203 course contains a complete set of videos that give you thorough knowledge of the topics covered by the Microsoft certification exam. Pass the Microsoft DP-203 exam with flying colors.

Curriculum for DP-203 Certification Video Course

| Name of Video | Time |
|---|---|
| 1. IMPORTANT - How we are going to approach the exam objectives | 3:00 |
| 2. OPTIONAL - Overview of Azure | 2:00 |
| 3. OPTIONAL - Concepts in Azure | 4:00 |
| 4. Azure Free Account | 5:00 |
| 5. Creating an Azure Free Account | 5:00 |
| 6. OPTIONAL - Quick tour of the Azure Portal | 6:00 |

| Name of Video | Time |
|---|---|
| 1. Section Introduction | 2:00 |
| 2. Understanding data | 4:00 |
| 3. Example of data storage | 2:00 |
| 4. Lab - Azure Storage accounts | 6:00 |
| 5. Lab - Azure SQL databases | 15:00 |
| 6. A quick note when it comes to the Azure Free Account | 4:00 |
| 7. Lab - Application connecting to Azure Storage and SQL database | 11:00 |
| 8. Different file formats | 7:00 |
| 9. Azure Data Lake Gen-2 storage accounts | 3:00 |
| 10. Lab - Creating an Azure Data Lake Gen-2 storage account | 9:00 |
| 11. Using PowerBI to view your data | 7:00 |
| 12. Lab - Authorizing to Azure Data Lake Gen 2 - Access Keys - Storage Explorer | 6:00 |
| 13. Lab - Authorizing to Azure Data Lake Gen 2 - Shared Access Signatures | 8:00 |
| 14. Azure Storage Account - Redundancy | 11:00 |
| 15. Azure Storage Account - Access tiers | 9:00 |
| 16. Azure Storage Account - Lifecycle policy | 3:00 |
| 17. Note on Costing | 5:00 |

| Name of Video | Time |
|---|---|
| 1. Section Introduction | 2:00 |
| 2. The internals of a database engine | 4:00 |
| 3. Lab - Setting up a new Azure SQL database | 3:00 |
| 4. Lab - T-SQL - SELECT clause | 3:00 |
| 5. Lab - T-SQL - WHERE clause | 3:00 |
| 6. Lab - T-SQL - ORDER BY clause | 1:00 |
| 7. Lab - T-SQL - Aggregate Functions | 1:00 |
| 8. Lab - T-SQL - GROUP BY clause | 4:00 |
| 9. Lab - T-SQL - HAVING clause | 1:00 |
| 10. Quick Review on Primary and Foreign Keys | 4:00 |
| 11. Lab - T-SQL - Creating Tables with Keys | 3:00 |
| 12. Lab - T-SQL - Table Joins | 5:00 |

| Name of Video | Time |
|---|---|
| 1. Section Introduction | 2:00 |
| 2. Why do we need a data warehouse | 10:00 |
| 3. Welcome to Azure Synapse Analytics | 2:00 |
| 4. Lab - Let's create an Azure Synapse workspace | 3:00 |
| 5. Azure Synapse - Compute options | 3:00 |
| 6. Using External tables | 4:00 |
| 7. Lab - Using External tables - Part 1 | 9:00 |
| 8. Lab - Using External tables - Part 2 | 12:00 |
| 9. Lab - Creating a SQL pool | 7:00 |
| 10. Lab - SQL Pool - External Tables - CSV | 9:00 |
| 11. Data Cleansing | 4:00 |
| 12. Lab - SQL Pool - External Tables - CSV with formatted data | 3:00 |
| 13. Lab - SQL Pool - External Tables - Parquet - Part 1 | 4:00 |
| 14. Lab - SQL Pool - External Tables - Parquet - Part 2 | 7:00 |
| 15. Loading data into the Dedicated SQL Pool | 2:00 |
| 16. Lab - Loading data into a table - COPY Command - CSV | 11:00 |
| 17. Lab - Loading data into a table - COPY Command - Parquet | 3:00 |
| 18. Pausing the Dedicated SQL pool | 3:00 |
| 19. Lab - Loading data using PolyBase | 5:00 |
| 20. Lab - BULK INSERT from Azure Synapse | 6:00 |
| 21. My own experience | 6:00 |
| 22. Designing a data warehouse | 11:00 |
| 23. More on dimension tables | 5:00 |
| 24. Lab - Building a data warehouse - Setting up the database | 6:00 |
| 25. Lab - Building a Fact Table | 8:00 |
| 26. Lab - Building a dimension table | 6:00 |
| 27. Lab - Transfer data to our SQL Pool | 15:00 |
| 28. Other points in the copy activity | 2:00 |
| 29. Lab - Using Power BI for Star Schema | 6:00 |
| 30. Understanding Azure Synapse Architecture | 7:00 |
| 31. Understanding table types | 7:00 |
| 32. Understanding Round-Robin tables | 5:00 |
| 33. Lab - Creating Hash-distributed Tables | 5:00 |
| 34. Note on creating replicated tables | 1:00 |
| 35. Designing your tables | 4:00 |
| 36. Designing tables - Review | 4:00 |
| 37. Lab - Example when using the right distributions for your tables | 10:00 |
| 38. Points on tables in Azure Synapse | 2:00 |
| 39. Lab - Windowing Functions | 4:00 |
| 40. Lab - Reading JSON files | 5:00 |
| 41. Lab - Surrogate keys for dimension tables | 6:00 |
| 42. Slowly Changing dimensions | 4:00 |
| 43. Type 3 Slowly Changing Dimension | 2:00 |
| 44. Creating a heap table | 3:00 |
| 45. Snowflake schema | 1:00 |
| 46. Lab - CASE statement | 6:00 |
| 47. Partitions in Azure Synapse | 2:00 |
| 48. Lab - Creating a table with partitions | 11:00 |
| 49. Lab - Switching partitions | 7:00 |
| 50. Indexes | 6:00 |
| 51. Quick Note - Modern Data Warehouse Architecture | 2:00 |
| 52. Quick Note on what we are taking forward to the next sections | 2:00 |
| 53. What about the Spark Pool | 2:00 |

| Name of Video | Time |
|---|---|
| 1. Section Introduction | 1:00 |
| 2. Extract, Transform and Load | 2:00 |
| 3. What is Azure Data Factory | 5:00 |
| 4. Starting with Azure Data Factory | 2:00 |
| 5. Lab - Azure Data Lake to Azure Synapse - Log.csv file | 13:00 |
| 6. Lab - Azure Data Lake to Azure Synapse - Parquet files | 13:00 |
| 7. Lab - The case with escape characters | 8:00 |
| 8. Review on what has been done so far | 6:00 |
| 9. Lab - Generating a Parquet file | 5:00 |
| 10. Lab - What about using a query for data transfer | 6:00 |
| 11. Deleting artefacts in Azure Data Factory | 3:00 |
| 12. Mapping Data Flow | 5:00 |
| 13. Lab - Mapping Data Flow - Fact Table | 14:00 |
| 14. Lab - Mapping Data Flow - Dimension Table - DimCustomer | 15:00 |
| 15. Lab - Mapping Data Flow - Dimension Table - DimProduct | 10:00 |
| 16. Lab - Surrogate Keys - Dimension tables | 4:00 |
| 17. Lab - Using Cache sink | 9:00 |
| 18. Lab - Handling Duplicate rows | 8:00 |
| 19. Note - What happens if we don't have any data in our DimProduct table | 4:00 |
| 20. Changing connection details | 1:00 |
| 21. Lab - Changing the Time column data in our Log.csv file | 8:00 |
| 22. Lab - Convert Parquet to JSON | 5:00 |
| 23. Lab - Loading JSON into SQL Pool | 5:00 |
| 24. Self-Hosted Integration Runtime | 3:00 |
| 25. Lab - Self-Hosted Runtime - Setting up nginx | 9:00 |
| 26. Lab - Self-Hosted Runtime - Setting up the runtime | 7:00 |
| 27. Lab - Self-Hosted Runtime - Copy Activity | 7:00 |
| 28. Lab - Self-Hosted Runtime - Mapping Data Flow | 16:00 |
| 29. Lab - Processing JSON Arrays | 8:00 |
| 30. Lab - Processing JSON Objects | 6:00 |
| 31. Lab - Conditional Split | 6:00 |
| 32. Lab - Schema Drift | 12:00 |
| 33. Lab - Metadata activity | 14:00 |
| 34. Lab - Azure DevOps - Git configuration | 11:00 |
| 35. Lab - Azure DevOps - Release configuration | 11:00 |
| 36. What resources are we taking forward | 1:00 |

| Name of Video | Time |
|---|---|
| 1. Batch and Real-Time Processing | 5:00 |
| 2. What are Azure Event Hubs | 5:00 |
| 3. Lab - Creating an instance of Event hub | 7:00 |
| 4. Lab - Sending and Receiving Events | 10:00 |
| 5. What is Azure Stream Analytics | 2:00 |
| 6. Lab - Creating a Stream Analytics job | 4:00 |
| 7. Lab - Azure Stream Analytics - Defining the job | 10:00 |
| 8. Review on what we have seen so far | 8:00 |
| 9. Lab - Reading database diagnostic data - Setup | 4:00 |
| 10. Lab - Reading data from a JSON file - Setup | 6:00 |
| 11. Lab - Reading data from a JSON file - Implementation | 5:00 |
| 12. Lab - Reading data from the Event Hub - Setup | 7:00 |
| 13. Lab - Reading data from the Event Hub - Implementation | 8:00 |
| 14. Lab - Timing windows | 10:00 |
| 15. Lab - Adding multiple outputs | 4:00 |
| 16. Lab - Reference data | 5:00 |
| 17. Lab - OVER clause | 8:00 |
| 18. Lab - Power BI Output | 10:00 |
| 19. Lab - Reading Network Security Group Logs - Server Setup | 3:00 |
| 20. Lab - Reading Network Security Group Logs - Enabling NSG Flow Logs | 8:00 |
| 21. Lab - Reading Network Security Group Logs - Processing the data | 13:00 |
| 22. Lab - User Defined Functions | 9:00 |
| 23. Custom Serialization Formats | 3:00 |
| 24. Lab - Azure Event Hubs - Capture Feature | 7:00 |
| 25. Lab - Azure Data Factory - Incremental Data Copy | 11:00 |
| 26. Demo on Azure IoT Devkit | 5:00 |
| 27. What resources are we taking forward | 1:00 |

| Name of Video | Time |
|---|---|
| 1. Section Introduction | 2:00 |
| 2. Introduction to Scala | 2:00 |
| 3. Installing Scala | 6:00 |
| 4. Scala - Playing with values | 3:00 |
| 5. Scala - Installing IntelliJ IDE | 5:00 |
| 6. Scala - If construct | 3:00 |
| 7. Scala - for construct | 1:00 |
| 8. Scala - while construct | 1:00 |
| 9. Scala - case construct | 1:00 |
| 10. Scala - Functions | 2:00 |
| 11. Scala - List collection | 4:00 |
| 12. Starting with Python | 3:00 |
| 13. Python - A simple program | 2:00 |
| 14. Python - If construct | 1:00 |
| 15. Python - while construct | 1:00 |
| 16. Python - List collection | 2:00 |
| 17. Python - Functions | 2:00 |
| 18. Quick look at Jupyter Notebook | 4:00 |
| 19. Lab - Azure Synapse - Creating a Spark pool | 8:00 |
| 20. Lab - Spark Pool - Starting out with Notebooks | 9:00 |
| 21. Lab - Spark Pool - Spark DataFrames | 4:00 |
| 22. Lab - Spark Pool - Sorting data | 6:00 |
| 23. Lab - Spark Pool - Load data | 8:00 |
| 24. Lab - Spark Pool - Removing NULL values | 8:00 |
| 25. Lab - Spark Pool - Using SQL statements | 3:00 |
| 26. Lab - Spark Pool - Write data to Azure Synapse | 11:00 |
| 27. Spark Pool - Combined Power | 2:00 |
| 28. Lab - Spark Pool - Sharing tables | 4:00 |
| 29. Lab - Spark Pool - Creating tables | 5:00 |
| 30. Lab - Spark Pool - JSON files | 6:00 |

| Name of Video | Time |
|---|---|
| 1. What is Azure Databricks | 4:00 |
| 2. Clusters in Azure Databricks | 6:00 |
| 3. Lab - Creating a workspace | 3:00 |
| 4. Lab - Creating a cluster | 14:00 |
| 5. Lab - Simple notebook | 3:00 |
| 6. Lab - Using DataFrames | 4:00 |
| 7. Lab - Reading a CSV file | 4:00 |
| 8. Databricks File System | 2:00 |
| 9. Lab - The SQL Data Frame | 3:00 |
| 10. Visualizations | 1:00 |
| 11. Lab - Few functions on dates | 2:00 |
| 12. Lab - Filtering on NULL values | 2:00 |
| 13. Lab - Parquet-based files | 2:00 |
| 14. Lab - JSON-based files | 3:00 |
| 15. Lab - Structured Streaming - Let's first understand our data | 3:00 |
| 16. Lab - Structured Streaming - Streaming from Azure Event Hubs - Initial steps | 8:00 |
| 17. Lab - Structured Streaming - Streaming from Azure Event Hubs - Implementation | 10:00 |
| 18. Lab - Getting data from Azure Data Lake - Setup | 7:00 |
| 19. Lab - Getting data from Azure Data Lake - Implementation | 5:00 |
| 20. Lab - Writing data to Azure Synapse SQL Dedicated Pool | 5:00 |
| 21. Lab - Stream and write to Azure Synapse SQL Dedicated Pool | 5:00 |
| 22. Lab - Azure Data Lake Storage Credential Passthrough | 10:00 |
| 23. Lab - Running an automated job | 6:00 |
| 24. Autoscaling a cluster | 2:00 |
| 25. Lab - Removing duplicate rows | 3:00 |
| 26. Lab - Using the PIVOT command | 4:00 |
| 27. Lab - Azure Databricks Table | 5:00 |
| 28. Lab - Azure Data Factory - Running a notebook | 6:00 |
| 29. Delta Lake Introduction | 2:00 |
| 30. Lab - Creating a Delta Table | 5:00 |
| 31. Lab - Streaming data into the table | 3:00 |
| 32. Lab - Time Travel | 2:00 |
| 33. Quick note on deciding between Azure Synapse and Azure Databricks | 2:00 |
| 34. What resources are we taking forward | 1:00 |

| Name of Video | Time |
|---|---|
| 1. Section Introduction | 1:00 |
| 2. What is the Azure Key Vault service | 5:00 |
| 3. Azure Data Factory - Encryption | 5:00 |
| 4. Azure Synapse - Customer Managed Keys | 3:00 |
| 5. Azure Dedicated SQL Pool - Transparent Data Encryption | 2:00 |
| 6. Lab - Azure Synapse - Data Masking | 10:00 |
| 7. Lab - Azure Synapse - Auditing | 6:00 |
| 8. Azure Synapse - Data Discovery and Classification | 4:00 |
| 9. Azure Synapse - Azure AD Authentication | 3:00 |
| 10. Lab - Azure Synapse - Azure AD Authentication - Setting the admin | 4:00 |
| 11. Lab - Azure Synapse - Azure AD Authentication - Creating a user | 8:00 |
| 12. Lab - Azure Synapse - Row-Level Security | 7:00 |
| 13. Lab - Azure Synapse - Column-Level Security | 4:00 |
| 14. Lab - Azure Data Lake - Role Based Access Control | 7:00 |
| 15. Lab - Azure Data Lake - Access Control Lists | 7:00 |
| 16. Lab - Azure Synapse - External Tables Authorization via Managed Identity | 8:00 |
| 17. Lab - Azure Synapse - External Tables Authorization via Azure AD Authentication | 5:00 |
| 18. Lab - Azure Synapse - Firewall | 7:00 |
| 19. Lab - Azure Data Lake - Virtual Network Service Endpoint | 7:00 |
| 20. Lab - Azure Data Lake - Managed Identity - Data Factory | 6:00 |

| Name of Video | Time |
|---|---|
| 1. Best practices for structuring files in your data lake | 3:00 |
| 2. Azure Storage accounts - Query acceleration | 2:00 |
| 3. View on Azure Monitor | 7:00 |
| 4. Azure Monitor - Alerts | 8:00 |
| 5. Azure Synapse - System Views | 2:00 |
| 6. Azure Synapse - Result set caching | 6:00 |
| 7. Azure Synapse - Workload Management | 4:00 |
| 8. Azure Synapse - Retention points | 2:00 |
| 9. Lab - Azure Data Factory - Monitoring | 7:00 |
| 10. Azure Data Factory - Monitoring - Alerts and Metrics | 4:00 |
| 11. Lab - Azure Data Factory - Annotations | 3:00 |
| 12. Azure Data Factory - Integration Runtime - Note | 7:00 |
| 13. Azure Data Factory - Pipeline Failures | 3:00 |
| 14. Azure Key Vault - High Availability | 2:00 |
| 15. Azure Stream Analytics - Metrics | 3:00 |
| 16. Azure Stream Analytics - Streaming Units | 2:00 |
| 17. Azure Stream Analytics - An example on monitoring the stream analytics job | 11:00 |
| 18. Azure Stream Analytics - The importance of time | 7:00 |
| 19. Azure Stream Analytics - More on the time aspect | 6:00 |
| 20. Azure Event Hubs and Stream Analytics - Partitions | 5:00 |
| 21. Azure Stream Analytics - An example on multiple partitions | 7:00 |
| 22. Azure Stream Analytics - More on partitions | 4:00 |
| 23. Azure Stream Analytics - An example on diagnosing errors | 4:00 |
| 24. Azure Stream Analytics - Diagnostics setting | 6:00 |
| 25. Azure Databricks - Monitoring | 7:00 |
| 26. Azure Databricks - Sending logs to Azure Monitor | 3:00 |
| 27. Azure Event Hubs - High Availability | 6:00 |

Microsoft Azure DP-203 Exam Dumps, Practice Test Questions

100% Latest & Updated Microsoft Azure DP-203 Practice Test Questions, Exam Dumps & Verified Answers!

30 Days Free Updates, Instant Download!

DP-203 Premium Bundle

- Premium File: 397 Questions & Answers. Last update: Jan 20, 2026

- Training Course: 262 Video Lectures

- Study Guide: 1325 Pages

- Latest Questions

- 100% Accurate Answers

- Fast Exam Updates

Microsoft DP-203 Training Course

Want verified and proven knowledge of Data Engineering on Microsoft Azure? It's easy when you have ExamSnap's Data Engineering on Microsoft Azure certification video training course by your side, which, along with our Microsoft DP-203 Exam Dumps & Practice Test questions, provides a complete solution for passing your exam.

Learn Cloud Data Engineering with Microsoft Azure DP-203 Certification Preparation

Comprehensive DP-203 Preparation for Azure Data Engineering Certification

Course Overview

The DP-203: Data Engineering on Microsoft Azure course is designed to equip learners with the skills required to design, implement, and manage modern data solutions on the Azure cloud platform. As organizations increasingly rely on cloud computing to handle vast amounts of structured and unstructured data, the role of a data engineer has become critical for transforming raw information into actionable insights.

Data engineers are responsible for building scalable data pipelines, designing efficient storage solutions, and ensuring that data is accurate, reliable, and secure. This course provides a deep dive into Microsoft Azure services, helping participants develop the technical expertise needed to work with cloud-based tools such as Azure Data Factory, Azure Databricks, Azure Synapse Analytics, and Azure Data Lake Storage.

The course also emphasizes practical applications, providing hands-on exercises and real-world scenarios that allow learners to implement end-to-end data engineering solutions. By the end of this course, participants will have a comprehensive understanding of how to manage data workflows, optimize storage, and build analytics-ready environments in the Azure ecosystem.

Whether you are aspiring to become a certified Azure Data Engineer or seeking to enhance your skills in big data analytics and cloud computing, this course offers the foundation and expertise necessary to succeed in a rapidly evolving field.

What You Will Learn from This Course

How to design and implement scalable data storage solutions using Azure Data Lake and Azure Blob Storage.

Techniques for building data pipelines and ETL processes with Azure Data Factory.

Hands-on experience with big data processing using Azure Databricks and Spark-based workflows.

How to analyze large datasets efficiently using Azure Synapse Analytics.

Strategies for integrating streaming data sources with Azure Stream Analytics.

Approaches to ensure data security, privacy, and compliance within cloud environments.

Performance optimization for queries, data processing tasks, and storage solutions.

Monitoring and troubleshooting data pipelines to maintain reliability and consistency.

Implementing role-based access controls and governance policies in Azure.

Preparing for DP-203 certification through practical exercises and knowledge reinforcement.

Learning Objectives

Upon completing this course, participants will be able to:

Understand the fundamental principles of data engineering and the Azure cloud platform.

Design and manage scalable data storage solutions that support structured, semi-structured, and unstructured data.

Develop ETL and ELT workflows to transform and move data between various systems and storage layers.

Leverage Azure Data Factory and Azure Databricks for data processing, cleaning, and transformation.

Build analytics-ready datasets in Azure Synapse Analytics and optimize data queries for performance.

Implement streaming data pipelines and real-time analytics solutions with Azure Stream Analytics.

Apply best practices for data governance, security, and compliance in cloud environments.

Monitor, troubleshoot, and optimize data workflows to ensure operational efficiency.

Gain practical skills and experience that directly prepare for the DP-203 certification exam.

Understand emerging trends and technologies in cloud-based data engineering.

Requirements

To make the most of this course, learners should have:

Basic understanding of data management concepts, databases, and SQL.

Familiarity with programming concepts, preferably in Python or similar languages.

Knowledge of cloud computing principles and services is advantageous but not mandatory.

Access to a Microsoft Azure account to complete hands-on labs and practical exercises.

A willingness to engage with both theoretical concepts and practical implementation of data solutions.

While prior experience in cloud platforms is beneficial, the course is designed to guide learners from foundational concepts to advanced implementation, making it suitable for those with varying levels of technical expertise.

Course Description

This DP-203: Data Engineering on Microsoft Azure course offers a comprehensive journey into the world of cloud-based data engineering. It starts with an introduction to the Azure ecosystem and gradually covers advanced topics such as data pipeline development, big data processing, analytics optimization, and cloud security.

The course focuses on practical, real-world applications of Azure services. Participants will learn how to create and manage data storage solutions that can handle large volumes of structured and unstructured data efficiently. They will gain expertise in designing and implementing ETL pipelines using Azure Data Factory and transforming data using Azure Databricks.

Analytics is another core component of the course. Students will learn how to prepare data for analysis in Azure Synapse Analytics, optimize queries, and create analytics-ready datasets. The course also explores streaming data processing with Azure Stream Analytics, enabling real-time insights and decision-making.

Security and compliance are emphasized throughout the course. Learners will understand how to implement role-based access control, encryption, and data governance policies to ensure that their solutions meet industry standards. Practical exercises reinforce these concepts, allowing learners to apply best practices in real-world scenarios.

By integrating hands-on labs, projects, and guided exercises, this course ensures that participants not only understand theoretical concepts but also develop the skills necessary to implement them effectively in professional environments.

Target Audience

This course is designed for a diverse audience of professionals who want to enhance their skills in cloud-based data engineering. It is particularly suitable for:

Aspiring data engineers seeking to build a career in cloud computing and data analytics.

IT professionals looking to transition to a role focused on data engineering and management.

Data analysts and developers who want to gain expertise in building scalable data solutions.

Cloud architects and system engineers aiming to understand data workflows in Azure.

Students and professionals preparing for the DP-203 Microsoft certification exam.

Organizations seeking to train their teams in modern data engineering practices for better data-driven decision-making.

This course is suitable for individuals at different stages of their careers, providing foundational knowledge for beginners while also covering advanced topics for experienced professionals.

Prerequisites

While this course is designed to be accessible, having certain prerequisites will help learners maximize their experience:

Basic knowledge of databases and SQL: Understanding tables, queries, joins, and indexing is helpful.

Programming experience: Familiarity with Python, Scala, or other scripting languages is beneficial for working with data transformations and processing tasks.

Fundamentals of cloud computing: Knowing cloud concepts such as storage types, compute resources, and networking will help learners navigate the Azure ecosystem.

Analytical mindset: Comfort with analyzing datasets and understanding data relationships will enhance the learning experience.

Problem-solving skills: The ability to troubleshoot issues and optimize workflows is valuable when working on hands-on exercises.

These prerequisites ensure that learners can focus on understanding and applying data engineering concepts rather than struggling with foundational knowledge gaps. Even without extensive experience, participants can follow the course step-by-step to gain practical and theoretical expertise.

Understanding Data Engineering on Azure

Data engineering involves designing systems that efficiently collect, store, process, and analyze large datasets. With the proliferation of cloud platforms like Microsoft Azure, data engineers are increasingly responsible for building solutions that are scalable, secure, and capable of handling high-volume workloads.

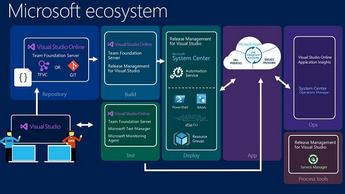

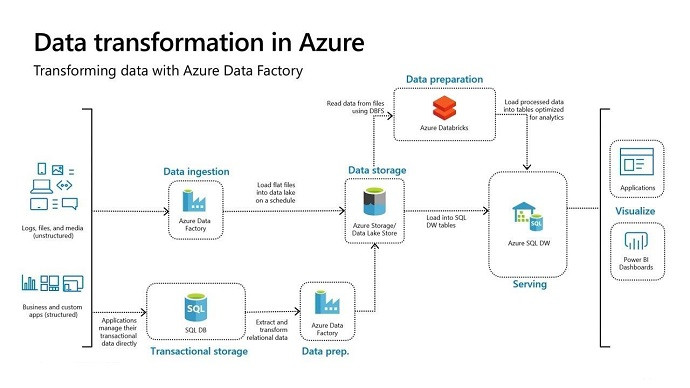

Azure provides a rich ecosystem of services that support end-to-end data engineering. From data ingestion to transformation and analytics, every stage of the data lifecycle can be managed with specialized tools. Azure Data Factory allows for the creation of automated pipelines that move data between different systems. Azure Databricks supports large-scale data processing and machine learning integration. Azure Synapse Analytics enables complex queries and reporting on massive datasets. Azure Data Lake Storage offers cost-effective, scalable storage for structured and unstructured data.

One of the key benefits of using Azure is the ability to integrate these services seamlessly. Data engineers can design workflows that automate routine tasks, monitor data pipelines in real-time, and optimize performance for both batch and streaming data workloads. This integration is particularly important for businesses that rely on data-driven decision-making and require high reliability and speed in their data operations.

The Role of a Data Engineer in Modern Enterprises

Data engineers serve as the backbone of an organization's data infrastructure. Their responsibilities extend beyond simple data movement and storage. They ensure that data is accurate, timely, and available for business intelligence, analytics, and machine learning applications.

A data engineer’s role in an Azure environment often involves:

Designing and implementing scalable storage solutions that accommodate growing data volumes.

Creating automated pipelines to extract, transform, and load data from various sources.

Integrating real-time data streams for immediate insights.

Optimizing data processing tasks for performance and cost efficiency.

Ensuring data security and compliance with regulatory standards.

Collaborating with data analysts, data scientists, and business stakeholders to understand requirements and deliver actionable datasets.

By mastering these responsibilities through a structured course like DP-203, learners gain the expertise needed to contribute significantly to organizational data strategies.

Course Modules/Sections

The DP-203: Data Engineering on Microsoft Azure course is carefully structured into several comprehensive modules to provide a thorough understanding of cloud-based data engineering and practical hands-on experience. The modules are designed to build progressively from foundational concepts to advanced topics, ensuring that learners develop a robust skill set suitable for both professional practice and certification preparation.

The initial module introduces learners to data engineering principles, the Azure ecosystem, and the role of a data engineer within modern enterprises. This module lays the foundation for understanding how structured and unstructured data is collected, stored, and processed using cloud services. It also covers fundamental concepts such as data storage types, cloud architecture, and data pipelines.

The second module focuses on data ingestion and transformation, providing detailed guidance on building extract, transform, and load workflows using Azure Data Factory. Participants learn how to design pipelines that move data efficiently between various sources and destinations, automate workflows, and handle errors and exceptions in a controlled manner. The module also introduces Azure Databricks as a powerful platform for large-scale data processing and transformation.

The third module delves into advanced analytics, teaching learners how to optimize data for analysis using Azure Synapse Analytics. This module covers creating and managing analytics-ready datasets, optimizing query performance, and integrating batch and streaming data workflows for comprehensive business intelligence and reporting.

A separate module is dedicated to data security, governance, and compliance. In this section, learners explore best practices for implementing role-based access control, encryption, data masking, and auditing. Emphasis is placed on maintaining regulatory compliance while ensuring that data pipelines are both reliable and secure.

Additional modules include real-time data processing, where learners work with streaming data using Azure Stream Analytics, and monitoring and optimization of pipelines for performance efficiency. Hands-on labs and practical exercises are embedded within every module to reinforce learning and encourage application of theoretical concepts in real-world scenarios.

By the end of these modules, learners gain an end-to-end understanding of data engineering on Microsoft Azure, from designing scalable storage solutions to implementing fully automated, secure, and optimized data pipelines for enterprise environments.

Key Topics Covered

The course covers a wide array of topics critical to mastering data engineering on Microsoft Azure. The first set of topics focuses on data storage and management. Learners explore Azure Data Lake Storage, Azure Blob Storage, and relational database services in Azure. They understand how to design storage solutions that accommodate structured, semi-structured, and unstructured data efficiently while ensuring scalability and cost-effectiveness.
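To make the storage-design topic concrete, here is a minimal Python sketch of one common way to lay out files in a data lake: a zone, a dataset name, then Hive-style `year=`, `month=`, and `day=` folders. The `lake_path` helper and the `raw`/`sales` names are hypothetical and used only for illustration; Azure does not require this layout.

```python
from datetime import date


def lake_path(zone: str, dataset: str, day: date, filename: str) -> str:
    """Build a date-partitioned path such as raw/sales/year=2024/month=01/day=15/x.parquet.

    The zone/dataset/year/month/day layout is a hypothetical convention for
    illustration only; pick a layout that matches your own query patterns.
    """
    return (
        f"{zone}/{dataset}/"
        f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
        f"{filename}"
    )


if __name__ == "__main__":
    print(lake_path("raw", "sales", date(2024, 1, 15), "orders.parquet"))
    # raw/sales/year=2024/month=01/day=15/orders.parquet
```

Encoding date parts in folder names lets query engines that support folder-based partition pruning skip folders a query does not need.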

The second set of topics delves into data integration and pipeline development. Participants gain hands-on experience designing ETL and ELT workflows using Azure Data Factory. They learn to schedule and orchestrate data movement, perform transformations, and monitor pipeline execution. Topics also include integrating various data sources, handling failures and retries, and applying logging and alerting mechanisms to ensure operational reliability.
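Handling failures and retries follows a general pattern: run a step, log a failure, wait, and try again a bounded number of times before surfacing the error. Azure Data Factory configures retries declaratively on each activity; the Python below is only a minimal sketch of the pattern itself, not how Data Factory implements it. The `run_with_retries` helper and the `flaky_copy` step are hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    max_retries: int = 3,
    delay_seconds: float = 1.0,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run task(); on failure, log, wait, and retry up to max_retries more times.

    The wait grows by `backoff` after each failure. The last failure is
    re-raised so the caller (or an alerting hook) can see it.
    """
    attempt = 0
    wait = delay_seconds
    while True:
        try:
            return task()
        except Exception as exc:  # a real pipeline would retry only transient errors
            if attempt >= max_retries:
                raise
            attempt += 1
            print(f"attempt {attempt} failed ({exc}); retrying in {wait:.1f}s")
            sleep(wait)
            wait *= backoff


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_copy() -> str:
        # Hypothetical copy step that fails twice with a transient error.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("transient source error")
        return "copied 100 rows"

    # sleep is replaced with a no-op so the demo runs instantly.
    print(run_with_retries(flaky_copy, sleep=lambda s: None))
```

Injecting `sleep` as a parameter keeps the helper testable without real delays.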

Data processing and transformation form another major focus area. Learners work with Azure Databricks to perform large-scale data processing using Spark-based frameworks. Topics include batch processing, data cleansing, aggregations, and preparing datasets for analytics or machine learning. The course also covers optimization techniques to improve performance and reduce processing time in big data environments.
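The cleansing-then-aggregation flow can be sketched in plain Python, assuming rows arrive as dictionaries. In a Spark notebook the same steps would typically use `DataFrame.dropna`, `DataFrame.dropDuplicates`, and `groupBy(...).agg(...)`; the `cleanse_and_aggregate` function below is a hypothetical stand-in so the logic can be read without a cluster.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable


def cleanse_and_aggregate(
    rows: Iterable[Dict[str, Any]], key: str, value: str
) -> Dict[Any, float]:
    """Drop incomplete rows, drop exact duplicates, then sum `value` per `key`."""
    seen = set()
    totals: Dict[Any, float] = defaultdict(float)
    for row in rows:
        # Cleansing step 1: skip rows missing the grouping key or the measure.
        if row.get(key) is None or row.get(value) is None:
            continue
        # Cleansing step 2: skip rows identical to one already kept.
        fingerprint = tuple(sorted(row.items()))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        # Aggregation: running total per key.
        totals[row[key]] += row[value]
    return dict(totals)


if __name__ == "__main__":
    rows = [
        {"region": "east", "amount": 10.0},
        {"region": "east", "amount": 10.0},   # exact duplicate
        {"region": "west", "amount": 5.0},
        {"region": None, "amount": 7.0},      # missing key
        {"region": "west", "amount": None},   # missing measure
        {"region": "west", "amount": 2.5},
    ]
    print(cleanse_and_aggregate(rows, "region", "amount"))
    # {'east': 10.0, 'west': 7.5}
```

Dropping exact duplicates assumes identical rows are accidental repeats; if two genuine records can be identical, deduplicate on a business key instead.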

Analytics and reporting are addressed in depth through the study of Azure Synapse Analytics. Topics include designing analytics-ready datasets, writing optimized queries, performing data modeling, and integrating batch and streaming data for comprehensive analysis. Learners also explore visualization tools and dashboards that facilitate decision-making based on processed data.
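Data modeling for analytics often produces a star schema, where fact rows reference dimension rows through surrogate keys. The following is a minimal Python sketch of that mapping, assuming hypothetical `customer_id` business keys and a reserved surrogate key of `-1` for unknown members (a common convention, not a Synapse requirement).

```python
from typing import Dict, Iterable, List


def build_dimension(business_keys: Iterable[str]) -> Dict[str, int]:
    """Assign sequential surrogate keys, starting at 1, to distinct business keys."""
    dim: Dict[str, int] = {}
    for bk in business_keys:
        if bk not in dim:
            dim[bk] = len(dim) + 1
    return dim


def build_fact(sales: Iterable[dict], customer_dim: Dict[str, int],
               unknown_key: int = -1) -> List[dict]:
    """Replace each sale's business key with the dimension's surrogate key."""
    return [
        {
            # Unmatched customers map to the "unknown" dimension member.
            "customer_sk": customer_dim.get(sale["customer_id"], unknown_key),
            "amount": sale["amount"],
        }
        for sale in sales
    ]


if __name__ == "__main__":
    dim_customer = build_dimension(["C001", "C002", "C001", "C003"])
    print(dim_customer)  # {'C001': 1, 'C002': 2, 'C003': 3}
    fact_sales = build_fact(
        [{"customer_id": "C002", "amount": 40.0},
         {"customer_id": "C999", "amount": 15.0}],
        dim_customer,
    )
    print(fact_sales)  # [{'customer_sk': 2, 'amount': 40.0}, {'customer_sk': -1, 'amount': 15.0}]
```

Mapping unmatched rows to an explicit unknown member keeps fact rows from being silently dropped by inner joins in reports.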

Real-time data processing is another critical topic. Participants work with Azure Stream Analytics to process streaming data from multiple sources, apply transformations, and generate actionable insights in near real-time. They learn how to implement event-driven architectures, detect anomalies, and integrate streaming outputs with storage and analytics systems.
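Stream processing commonly groups events into fixed, non-overlapping time windows. Azure Stream Analytics expresses this in its query language with `TumblingWindow` in a `GROUP BY` clause; the Python below is only a minimal sketch of the same counting logic over an in-memory list of event timestamps. Aligning windows to the Unix epoch is an assumption of this example.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, Iterable

EPOCH = datetime(1970, 1, 1)


def tumbling_window_counts(
    event_times: Iterable[datetime], window: timedelta
) -> Dict[datetime, int]:
    """Count events per fixed-size, non-overlapping window.

    Each event falls into exactly one window, identified by its start time.
    """
    counts: Counter = Counter()
    for ts in event_times:
        index = (ts - EPOCH) // window  # timedelta // timedelta -> int
        counts[EPOCH + index * window] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    events = [
        datetime(2024, 5, 1, 10, 0, 5),
        datetime(2024, 5, 1, 10, 0, 40),
        datetime(2024, 5, 1, 10, 1, 10),
    ]
    for start, n in tumbling_window_counts(events, timedelta(minutes=1)).items():
        print(start.isoformat(), n)
    # 2024-05-01T10:00:00 2
    # 2024-05-01T10:01:00 1
```

This in-memory version sees every event up front, so it ignores the late-arrival and out-of-order handling that a real streaming job must address.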

Security and governance are consistently integrated throughout the curriculum. Topics include implementing role-based access control, data encryption at rest and in transit, auditing and monitoring access, and maintaining compliance with industry regulations. This ensures that learners understand how to manage sensitive data securely while designing and deploying data pipelines.
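Role-based access control grants permissions to roles rather than to individual users, and auditing records each access decision. The sketch below is a simplified, hypothetical model of both ideas in Python; the role names and actions are illustrative and do not correspond to Azure's built-in role definitions.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Hypothetical role definitions: role name -> permitted actions.
ROLE_PERMISSIONS = {
    "reader": {"read"},
    "contributor": {"read", "write"},
    "owner": {"read", "write", "manage_access"},
}


@dataclass
class Principal:
    name: str
    roles: Set[str] = field(default_factory=set)


def check_access(principal: Principal, action: str,
                 audit_log: List[Tuple[str, str, str]]) -> bool:
    """Allow the action if any assigned role grants it; record the decision.

    Unknown roles grant nothing, so access is denied by default.
    """
    allowed = any(
        action in ROLE_PERMISSIONS.get(role, set()) for role in principal.roles
    )
    audit_log.append((principal.name, action, "allowed" if allowed else "denied"))
    return allowed


if __name__ == "__main__":
    log: List[Tuple[str, str, str]] = []
    analyst = Principal("analyst", {"reader"})
    print(check_access(analyst, "read", log))   # True
    print(check_access(analyst, "write", log))  # False
    print(log)  # [('analyst', 'read', 'allowed'), ('analyst', 'write', 'denied')]
```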

Performance optimization is also covered extensively. Learners explore query optimization, indexing strategies, partitioning, and caching techniques to improve the efficiency of data processing and analytics workloads. Monitoring and troubleshooting methods are included to help learners detect bottlenecks and implement corrective measures effectively.
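Partitioning and distribution choices matter because work is split across buckets, and a skewed key leaves one bucket doing most of the work. The sketch below assumes 60 buckets, matching the number of distributions in a Synapse dedicated SQL pool, and uses MD5 purely as a stand-in hash; it does not reproduce the hash function Synapse uses internally. The `assign_distribution` and `skew_ratio` helpers are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Hashable, Iterable

NUM_DISTRIBUTIONS = 60


def assign_distribution(key: Hashable, buckets: int = NUM_DISTRIBUTIONS) -> int:
    """Map a distribution-column value to a bucket with a stable hash (MD5 as a stand-in)."""
    digest = hashlib.md5(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def skew_ratio(keys: Iterable[Hashable], buckets: int = NUM_DISTRIBUTIONS) -> float:
    """Largest bucket size divided by the average size (1.0 means perfectly even)."""
    counts = Counter(assign_distribution(k, buckets) for k in keys)
    sizes = [counts.get(b, 0) for b in range(buckets)]
    return max(sizes) / (sum(sizes) / buckets)


if __name__ == "__main__":
    print(round(skew_ratio(range(6000)), 2))  # many distinct keys: a ratio near 1
    print(skew_ratio(["same"] * 600))         # one repeated key: 60.0
```

A low-cardinality or heavily repeated distribution column concentrates rows in a few buckets, which is why the choice of distribution column matters for large fact tables.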

Finally, career-oriented topics are woven throughout the course. These include preparing for the DP-203 certification, understanding industry use cases, and gaining insights into emerging trends in cloud-based data engineering, such as serverless architectures, real-time analytics, and big data ecosystem integration.

Teaching Methodology

The teaching methodology for the DP-203: Data Engineering on Microsoft Azure course combines theoretical instruction with extensive practical exercises, ensuring that learners gain both conceptual knowledge and hands-on experience. Lectures and presentations provide a clear understanding of fundamental principles, architecture, and workflows in cloud-based data engineering. Each concept is explained with real-world examples that illustrate how organizations use Azure services to manage, process, and analyze data efficiently.

Hands-on labs are a cornerstone of the teaching methodology. Learners are guided step-by-step to implement data pipelines, build ETL workflows, and perform analytics tasks in live Azure environments. These exercises provide an interactive learning experience and reinforce the concepts taught in lectures. By working with actual Azure services such as Data Factory, Databricks, Synapse Analytics, and Data Lake Storage, participants develop practical skills that are directly transferable to professional settings.

Project-based learning is integrated into the curriculum, allowing learners to work on end-to-end data engineering solutions. This approach encourages problem-solving, critical thinking, and creativity as students design, implement, and optimize their data pipelines and analytics workflows. Projects are designed to simulate real-world scenarios, helping learners understand challenges such as data quality issues, pipeline failures, and performance bottlenecks.

Instructor-led sessions are complemented by online resources, documentation, and interactive tutorials. Learners are encouraged to explore Azure documentation, participate in discussion forums, and collaborate with peers to reinforce understanding. The course also emphasizes iterative learning, where students repeatedly test, refine, and optimize their solutions to build confidence and mastery over the tools and concepts.

Assessment and feedback are integral to the teaching methodology. Instructors provide continuous guidance, identify areas for improvement, and offer recommendations for optimizing workflows and solutions. This ensures that learners not only acquire knowledge but also develop the competence and confidence required to execute data engineering tasks effectively.

Assessment & Evaluation

Assessment and evaluation in the DP-203 course are designed to measure both theoretical understanding and practical proficiency in data engineering on Microsoft Azure. Learners are evaluated through a combination of quizzes, hands-on labs, project submissions, and participation in discussions. These assessments ensure that participants have a well-rounded grasp of the concepts and can apply them in real-world scenarios.

Quizzes are used throughout the course to test comprehension of key concepts such as data storage design, pipeline orchestration, data transformation, and analytics optimization. These assessments provide immediate feedback and help learners identify areas that require additional focus. The quizzes also familiarize participants with the types of questions they may encounter in the DP-203 certification exam.

Hands-on lab exercises serve as practical assessments, requiring learners to implement ETL workflows, build data pipelines, and optimize analytics processes in Azure. These labs are evaluated based on the correctness, efficiency, and scalability of the solutions, ensuring that participants can translate theoretical knowledge into practical outcomes. Learners are encouraged to troubleshoot issues, apply best practices, and refine their solutions under guided supervision.

Project-based assessments provide a comprehensive evaluation of learners’ skills. Participants are tasked with designing end-to-end data engineering solutions, integrating multiple Azure services, and presenting their workflows and findings. Projects are assessed on criteria such as architectural design, pipeline efficiency, data transformation accuracy, security implementation, and the ability to generate actionable analytics. This holistic approach prepares learners for professional tasks and the DP-203 certification exam.

Instructor feedback is an essential part of the evaluation process. Detailed reviews of lab exercises and projects highlight strengths, identify gaps, and provide actionable recommendations for improvement. This iterative feedback ensures that learners progressively enhance their skills and gain confidence in their ability to execute data engineering tasks.

Participation in discussions and collaborative exercises is also monitored and factored into the overall assessment. This approach encourages active learning, peer interaction, and the sharing of insights, fostering a deeper understanding of data engineering concepts and their practical application in Microsoft Azure environments.

By combining quizzes, labs, projects, and feedback-driven assessments, the course ensures that learners develop a comprehensive skill set in data engineering, from designing scalable storage solutions to implementing optimized pipelines and analytics workflows in the cloud.

Benefits of the Course

The DP-203: Data Engineering on Microsoft Azure course offers a wide range of benefits for learners looking to excel in cloud-based data engineering and analytics. One of the primary advantages of this course is the acquisition of practical, hands-on experience with Microsoft Azure services. By working directly with tools such as Azure Data Factory, Azure Databricks, Azure Synapse Analytics, and Azure Data Lake Storage, learners gain a strong understanding of how to design and implement scalable, secure, and high-performance data solutions. This practical knowledge is highly sought after in today’s data-driven business environment, where organizations rely on efficient cloud solutions to manage and analyze large volumes of data.

Another significant benefit of the course is the alignment with industry standards and best practices. Participants learn how to optimize data pipelines, implement security protocols, and manage governance and compliance requirements. This ensures that the skills acquired are directly applicable to real-world scenarios, helping learners to deliver reliable and efficient data solutions within enterprise environments. Knowledge of these best practices also provides learners with the confidence to handle complex projects and troubleshoot issues effectively.

The course also prepares participants for the DP-203 certification exam, a recognized credential for data engineers working with Microsoft Azure. Certification demonstrates proficiency in designing and implementing cloud-based data solutions and enhances career prospects in cloud computing, big data analytics, and enterprise data management. The course’s project-based learning approach allows learners to simulate real-world scenarios, providing experience that is valuable both for certification preparation and professional development.

In addition to technical skills, the course fosters analytical thinking and problem-solving abilities. Participants learn to analyze large datasets, design optimized workflows, and implement efficient data processing strategies. This combination of technical expertise and analytical capability ensures that learners can contribute to strategic decision-making processes within their organizations, providing actionable insights derived from well-managed data.

Another key benefit is the flexibility and adaptability of the skills gained. The knowledge of building data pipelines, processing large datasets, and optimizing analytics workflows can be applied to multiple industries, including finance, healthcare, retail, technology, and government sectors. As businesses increasingly migrate their data infrastructure to cloud platforms, the ability to implement scalable and secure data solutions in Azure becomes a critical asset for any data engineering professional.

Finally, learners gain exposure to emerging technologies and trends in cloud-based data engineering. The course emphasizes innovations such as serverless architectures, real-time analytics, and integration of AI and machine learning workflows. This forward-looking perspective ensures that participants are not only skilled in current practices but also prepared for evolving demands in the data engineering landscape.

Course Duration

The DP-203: Data Engineering on Microsoft Azure course is designed to provide comprehensive coverage of all key topics while allowing sufficient time for hands-on practice and project completion. Full-time learners typically complete the course in four to six weeks, depending on the pace of instruction and their level of engagement. Part-time or self-paced learners can spread it over eight to twelve weeks, balancing professional commitments while gaining in-depth knowledge and practical experience.

The duration is carefully planned to ensure that learners have adequate time to master foundational concepts before progressing to advanced topics. The first week is usually dedicated to understanding the Azure ecosystem, cloud computing fundamentals, and the principles of data engineering. This foundational knowledge serves as the basis for building data pipelines, working with large datasets, and designing scalable data solutions.

Subsequent weeks focus on practical application and skill development. Learners spend time implementing ETL and ELT workflows using Azure Data Factory, performing large-scale data processing with Azure Databricks, and preparing analytics-ready datasets in Azure Synapse Analytics. Each week combines lectures, demonstrations, and hands-on labs to ensure learners can translate theoretical knowledge into practical skills.

Time is also allocated for the real-time data processing, security, and governance modules. These cover designing role-based access control, applying encryption, and monitoring data pipelines for compliance. Learners are encouraged to experiment with various pipeline architectures, data transformations, and optimization strategies to reinforce their understanding.

The final weeks typically focus on project-based learning and certification preparation. Participants work on end-to-end data engineering projects, integrating multiple Azure services, optimizing workflows, and ensuring security and compliance. This approach allows learners to consolidate their skills, gain confidence in their abilities, and prepare effectively for the DP-203 certification exam.

The structured course duration ensures that participants achieve a balance between comprehensive theoretical understanding and extensive practical experience. By the end of the course, learners are equipped to design, implement, and manage cloud-based data solutions efficiently, making them highly competitive in the job market.

Tools & Resources Required

To successfully complete the DP-203: Data Engineering on Microsoft Azure course, learners need access to a combination of software tools, cloud services, and educational resources. The primary tool required is a Microsoft Azure account, which provides access to services such as Azure Data Factory, Azure Databricks, Azure Synapse Analytics, and Azure Data Lake Storage. These services form the core platform for building, processing, and analyzing data in cloud environments. Hands-on experience with these tools is essential for reinforcing theoretical knowledge and gaining practical skills.

In addition to Azure services, learners benefit from familiarity with programming languages commonly used in data engineering workflows. Python is frequently used for scripting data transformations and interacting with APIs, while SQL is critical for querying, aggregating, and optimizing datasets. Knowledge of Python and SQL allows learners to implement efficient data processing workflows and perform complex analytics tasks within the Azure ecosystem.

Educational resources such as official Microsoft documentation, tutorials, and online guides supplement the course curriculum. These resources provide detailed explanations of Azure services, best practices for pipeline design, and troubleshooting guidance. Learners are encouraged to explore these materials to deepen their understanding and expand their problem-solving capabilities.

Integrated development environments (IDEs) and code editors, such as Visual Studio Code, are recommended for writing scripts, managing notebooks, and executing data processing tasks. Azure Databricks notebooks provide an interactive interface for working with large datasets, performing transformations, and running machine learning models. Familiarity with these tools enhances the learning experience and allows participants to experiment with different data engineering approaches.

Version control tools such as Git may also be useful for managing code, tracking changes, and collaborating on projects. While not mandatory, using version control supports best practices in professional data engineering environments and helps learners develop skills that are highly valued in the industry.

Finally, participants need access to stable internet connectivity and a computer capable of running cloud-based applications efficiently. The combination of cloud services, programming tools, and educational resources ensures that learners can engage fully with the course content, complete hands-on exercises, and build practical expertise in data engineering on Microsoft Azure.

Designing Scalable Data Storage Solutions

One of the fundamental components of data engineering on Azure is designing scalable storage solutions that support structured, semi-structured, and unstructured data. Azure Data Lake Storage offers a hierarchical namespace that allows organizations to store large datasets efficiently while providing access control and security features. Data engineers learn to design folder structures, manage access permissions, and optimize storage for performance and cost-effectiveness.
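
One common way to design a data lake folder structure is as follows. Data is separated by zone (for example, raw versus curated) and by source, then partitioned by date so downstream jobs can read only the slices they need. The sketch below builds such paths. The zone names, the `lake_path` helper, and the Hive-style `year=/month=/day=` convention are assumptions based on a widespread pattern, not a layout prescribed by Azure.

```python
from datetime import date

ALLOWED_ZONES = {"raw", "curated", "enriched"}  # hypothetical zone names


def lake_path(zone: str, source: str, dataset: str, day: date, filename: str) -> str:
    """Build a Hive-style, date-partitioned path for a file in a data lake container."""
    if zone not in ALLOWED_ZONES:
        raise ValueError(f"unknown zone: {zone!r}")
    return (
        f"{zone}/{source}/{dataset}/"
        f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/{filename}"
    )


print(lake_path("raw", "crm", "customers", date(2024, 3, 7), "part-0001.parquet"))
# raw/crm/customers/year=2024/month=03/day=07/part-0001.parquet
```

Data Lake Storage Gen2 supports access control lists on directories, so a layout like this also makes it straightforward to grant permissions per zone or per source.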

Azure Blob Storage, on which Data Lake Storage Gen2 is built, provides cost-effective object storage for unstructured data. Learners explore strategies for partitioning, indexing, and managing large datasets to ensure fast retrieval and efficient processing. These storage solutions form the backbone of cloud-based data engineering workflows, supporting everything from batch processing to real-time analytics.

Data modeling and organization are also emphasized in this module. Participants learn how to structure data for optimal query performance, manage metadata, and ensure data integrity across multiple storage layers. Understanding these principles is critical for building pipelines that are both scalable and reliable, enabling organizations to leverage their data effectively for analytics and business intelligence.

Implementing ETL and ELT Workflows

Extract, transform, and load (ETL) workflows are central to data engineering on Azure, as is their ELT variant, in which raw data is loaded into the target first and transformed there. Participants gain practical experience designing pipelines using Azure Data Factory to move data from source systems to target storage, applying transformations along the way. The course covers both batch and streaming ETL scenarios, ensuring that learners can handle diverse data processing requirements.
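
The extract, transform, load sequence can be sketched in a few lines of plain Python. This is not Azure Data Factory code. A CSV string stands in for a source system and an in-memory SQLite database stands in for the target store. Both are assumptions made so the example runs anywhere, and the data and table names are hypothetical. The point is the ordering: records are cleaned inside the pipeline before they reach the target.

```python
import csv
import io
import sqlite3

# Stand-in for a source system export (hypothetical data).
SOURCE_CSV = """order_id,customer,amount
1, Alice ,120.50
2,Bob,
3,carol,75
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: trim and normalize names, cast types, drop rows missing an amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # simple data-quality rule: skip incomplete records
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), float(amount)))
    return cleaned


def load(rows: list[tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


target = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), target)
print(target.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 120.5), (3, 'Carol', 75.0)]
```

Keeping each stage as a separate function mirrors how pipeline activities are chained. Each step can be tested, retried, or replaced independently.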

Azure Databricks provides a platform for performing large-scale data transformations using Spark. Learners practice cleaning, aggregating, and enriching datasets, preparing them for analytics and machine learning. Emphasis is placed on optimizing performance, handling exceptions, and ensuring data quality throughout the workflow.

By mastering ETL and ELT workflows, learners gain the ability to automate data processing tasks, improve efficiency, and maintain consistency across complex datasets. This skill set is critical for modern enterprises that rely on accurate and timely data for decision-making.
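
ELT reverses the last two steps. Raw data is landed in the target first and then transformed there, typically with SQL. This is the pattern used when a warehouse engine does the heavy lifting. The minimal sketch below uses SQLite as a stand-in target, an assumption made for portability. The staging and output table names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: land the raw records as-is in a staging table (all text, no cleaning yet).
conn.execute("CREATE TABLE stg_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO stg_orders VALUES (?, ?, ?)",
    [("1", " Alice ", "120.50"), ("2", "Bob", ""), ("3", "carol", "75")],
)

# Transform: clean, normalize, and type the data inside the target engine with SQL.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           UPPER(SUBSTR(TRIM(customer), 1, 1)) || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
           CAST(amount AS REAL) AS amount
    FROM stg_orders
    WHERE TRIM(amount) <> ''
    """
)

print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 120.5), (3, 'Carol', 75.0)]
```

Because the raw staging table is kept, transformations can be rewritten and rerun later without re-extracting from the source. This is one of the main reasons teams choose ELT.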

Advanced Analytics with Azure Synapse

Azure Synapse Analytics enables comprehensive analysis of large datasets, combining data warehousing and big data capabilities. Learners explore techniques for creating analytics-ready datasets, optimizing queries, and integrating batch and streaming data. This allows organizations to generate actionable insights and support data-driven decision-making.

Topics include query optimization, indexing, partitioning, and caching strategies to improve performance. Participants also learn how to design data models that facilitate reporting, visualization, and predictive analytics. By mastering these capabilities, learners can provide scalable analytics solutions that meet the demands of modern businesses.
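
Partitioning pays off through partition pruning. When data is split by a key such as date, a query that filters on that key only needs to read the matching partitions. The sketch below simulates this in plain Python with a dictionary of partitions. The records and the `total_for_month` helper are hypothetical. The sketch illustrates the principle only and is not how Synapse or Spark implement pruning internally.

```python
from collections import defaultdict

# Hypothetical sales records: (sale_date, region, amount)
RECORDS = [
    ("2024-01-15", "east", 100.0),
    ("2024-01-20", "west", 50.0),
    ("2024-02-03", "east", 75.0),
    ("2024-02-28", "west", 25.0),
    ("2024-03-10", "east", 60.0),
]

# Partition by month: the first seven characters of the ISO date ("YYYY-MM").
partitions: dict[str, list[tuple[str, str, float]]] = defaultdict(list)
for record in RECORDS:
    partitions[record[0][:7]].append(record)


def total_for_month(month: str) -> tuple[float, int]:
    """Sum amounts for one month by reading only that month's partition.

    Returns the total and the number of rows scanned.
    """
    rows = partitions.get(month, [])  # .get avoids creating empty partitions
    return sum(amount for _, _, amount in rows), len(rows)


total, scanned = total_for_month("2024-02")
print(f"total={total}, rows scanned={scanned} of {len(RECORDS)}")
# total=100.0, rows scanned=2 of 5
```

In a date-partitioned lake, folder paths such as `year=/month=` produce the same effect: engines that understand the layout can skip folders that do not match the filter.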

Career Opportunities

Completing the DP-203: Data Engineering on Microsoft Azure course opens up a wide range of career opportunities in the rapidly growing field of cloud data engineering. Organizations across industries, from finance and healthcare to technology and retail, are increasingly relying on data engineers to manage large-scale data systems, ensure the reliability of data pipelines, and support business intelligence initiatives. The skills acquired in this course are in high demand, as companies seek professionals capable of designing and implementing cloud-based data solutions with efficiency, scalability, and security in mind.

Graduates of this course are well-prepared for roles such as Azure Data Engineer, Data Pipeline Developer, Cloud Data Architect, and Business Intelligence Engineer. These roles typically involve building and maintaining complex data pipelines, transforming raw data into actionable insights, and enabling real-time analytics using cloud platforms like Azure. By mastering tools such as Azure Data Factory, Azure Databricks, Azure Synapse Analytics, and Azure Data Lake Storage, learners gain the practical expertise required to handle large volumes of structured, semi-structured, and unstructured data, making them highly competitive candidates in the job market.

Data engineers certified in DP-203 also find opportunities to work on advanced analytics projects, integrating machine learning models, predictive analytics, and real-time data processing. Organizations value professionals who can optimize workflows, ensure data quality, and implement secure and compliant data management practices. In addition to technical proficiency, graduates of this course develop problem-solving skills, analytical thinking, and the ability to collaborate with cross-functional teams, all of which are essential for career growth in data engineering.

The course also provides a solid foundation for transitioning into leadership and strategic roles, such as Cloud Solutions Architect or Data Engineering Team Lead. These positions involve overseeing the design and implementation of enterprise-wide data solutions, defining data strategies, and mentoring junior engineers. Professionals who complete this course and achieve DP-203 certification are recognized as qualified experts in the Azure ecosystem, enhancing their credibility and opening doors to higher-level responsibilities and increased earning potential.

Beyond technical roles, the skills gained in this course are applicable to consulting, advisory, and freelance opportunities. Certified data engineers can assist organizations in migrating data infrastructure to the cloud, optimizing existing workflows, and designing solutions for improved analytics and reporting. The versatility of the knowledge and practical skills acquired in the DP-203 course ensures that graduates can adapt to evolving industry needs and pursue diverse career paths across multiple sectors.

By completing this course, learners position themselves for a rewarding and dynamic career in the cloud data engineering domain. They gain not only technical proficiency but also the confidence to manage complex projects, drive data-driven initiatives, and contribute to organizational success through innovative cloud-based solutions.

Enroll Today

Enrolling in the DP-203: Data Engineering on Microsoft Azure course is the first step toward mastering cloud-based data engineering and advancing your career in the rapidly evolving field of data analytics. The course provides a structured learning path that combines theoretical knowledge with extensive hands-on practice, ensuring that participants develop both conceptual understanding and practical expertise.

By enrolling, learners gain access to comprehensive modules covering data storage, ETL and ELT workflows, big data processing, analytics optimization, real-time streaming, and data security. Each module includes interactive exercises, guided labs, and project-based assignments that reinforce learning and allow participants to apply concepts in real-world scenarios. This immersive approach ensures that learners are not only prepared for the DP-203 certification exam but also equipped with the skills needed to implement scalable, secure, and high-performance data solutions in professional settings.

Participants also benefit from exposure to the latest tools and technologies within the Azure ecosystem. The course emphasizes best practices for designing and managing data pipelines, optimizing analytics workflows, and ensuring compliance with regulatory standards. Learners gain proficiency with Azure Data Factory, Azure Databricks, Azure Synapse Analytics, and Azure Data Lake Storage, enabling them to build robust cloud-based data infrastructure that meets modern business requirements.

Enrolling in this course also connects learners with expert instructors and a community of peers, fostering collaboration, knowledge sharing, and problem-solving. Participants receive continuous guidance, feedback, and support throughout the program, enhancing the learning experience and ensuring mastery of core concepts. Project-based exercises and real-world case studies allow learners to tackle complex challenges, develop critical thinking skills, and gain confidence in their ability to design and execute effective data engineering solutions.

Whether you are an aspiring data engineer, a cloud professional seeking to enhance your skills, or someone preparing for DP-203 certification, this course provides the knowledge, tools, and experience necessary to achieve your goals. Enrollment opens the door to new career opportunities, including roles as Azure Data Engineer, Cloud Data Architect, Data Pipeline Developer, and more. With the growing demand for skilled professionals in cloud data engineering, taking this step today can position you for long-term career success and professional growth.

By choosing to enroll, learners embark on a transformative journey that equips them with both the technical and analytical skills required to thrive in modern enterprises. The combination of structured modules, hands-on practice, expert guidance, and project-based learning ensures that participants are fully prepared to meet the challenges of cloud-based data engineering and capitalize on the increasing opportunities in this high-demand field.

Prepared by top IT trainers, the ExamSnap Data Engineering on Microsoft Azure certification video training course supports your IT exam preparation. It is aligned with the corresponding Microsoft DP-203 exam dumps, study guide, and practice test questions and answers.
